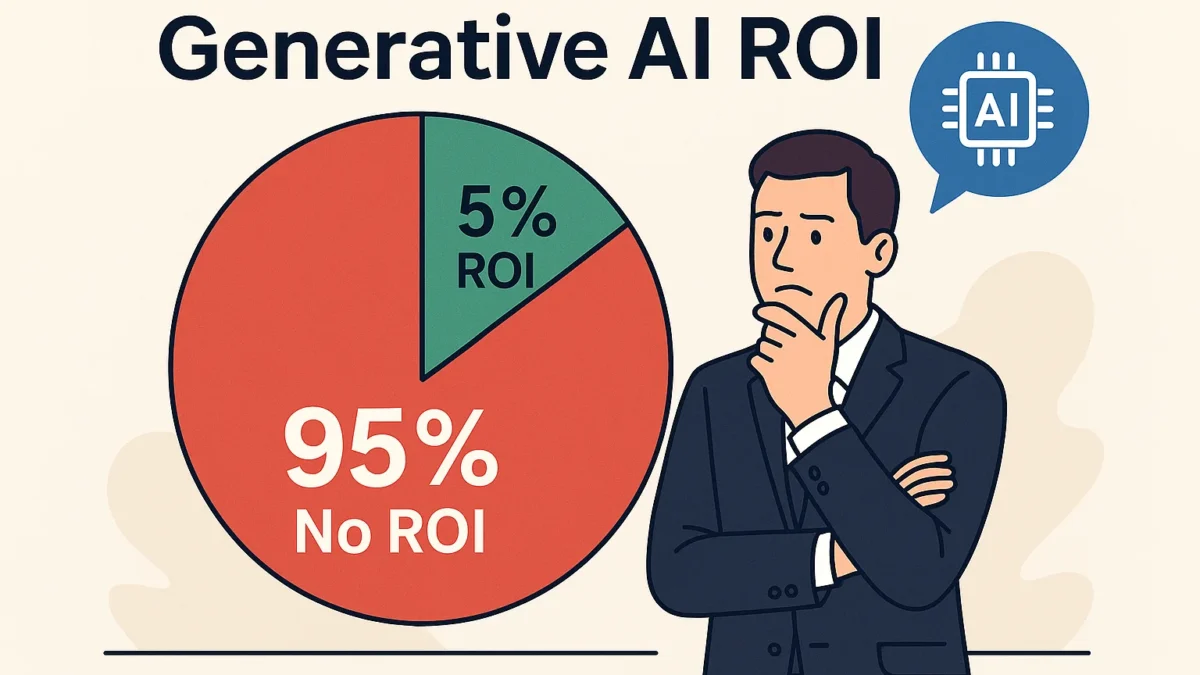

Generative AI ROI is under intense scrutiny after coverage of a new MIT analysis suggested that roughly 95% of enterprise pilots show no measurable return. If you’ve trialed assistants, copilots, or chatbots, that statistic may feel uncomfortably familiar. This guide unpacks why Generative AI ROI often lags and gives you a pragmatic, step-by-step playbook to turn promising demos into durable business value.

Table of Contents

- MIT’s Key Findings (with chart)

- Why Generative AI ROI Is Struggling

- Real-World Case Studies: Failures & Wins

- Is There an “AI Bubble”? Market Context

- 7-Step Playbook to Improve Generative AI ROI

- Industry Comparison: Expectations vs Reality (with chart)

- 90-Day Execution Roadmap (People • Process • Tech)

- KPI Framework & Benchmarks to Prove Generative AI ROI

- Build vs Buy: How to Choose for Faster ROI

- What’s Next for Generative AI ROI (with trend chart)

- Related Guides on NestOfWisdom

- FAQ

- References

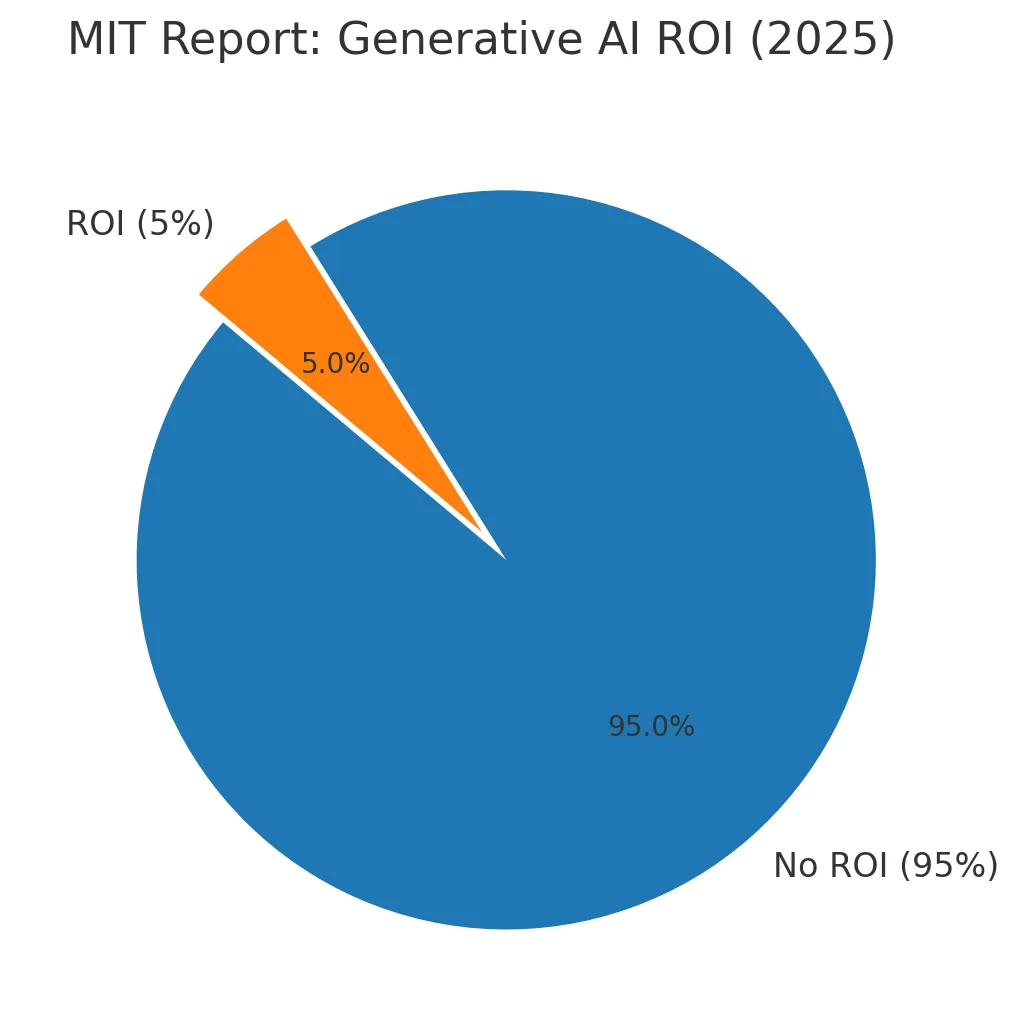

MIT’s Key Findings (with chart)

MIT’s NANDA initiative (The GenAI Divide: State of AI in Business 2025) has been widely reported as finding that about 95% of generative AI pilots have not yet produced measurable business impact, with ~5% demonstrating clear value or reaching scaled production. Importantly, the reported findings attribute the shortfall to execution gaps (problem selection, integration, measurement, and change management) rather than to a conclusion that “AI doesn’t work.”

Why Generative AI ROI Is Struggling

1) Misaligned Use Cases

Budgets often chase shiny, customer-facing chat experiences. But the quickest path to Generative AI ROI usually sits in back-office workflows: document intake and classification, compliance drafts, policy summarization, customer support deflection, finance close support, and knowledge retrieval. These are measurable, high-volume tasks with clear owners and SLAs.

2) Integration & “Memory” Gaps

Standalone bots demo well but fail in production when they can’t remember or reference enterprise context. Without retrieval-augmented generation (RAG), policy prompts, audit logs, and read/write integration to CRM/ERP/ITSM, assistants stay toy-grade. Durable value requires wiring AI into systems of record and surrounding it with governance.
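To make “memory + integration” concrete, here is a minimal, dependency-free sketch of the retrieval side of RAG with redaction and an audit trail. Everything in it is an illustrative assumption: the in-memory `POLICY_DOCS` corpus, keyword-overlap ranking (a stand-in for the vector search a production RAG layer would use), the email-only redaction rule, and the `audit_log` list. No model is called; the sketch only shows how a grounded, logged prompt could be assembled.

```python
import re
from datetime import datetime, timezone

# Hypothetical in-memory corpus standing in for indexed enterprise documents.
POLICY_DOCS = {
    "refund-policy": "Refunds over 500 USD require manager approval within 2 business days.",
    "invoice-policy": "Invoice exceptions must reference the purchase order number.",
    "travel-policy": "Travel bookings must use the approved corporate portal.",
}

# Illustrative redaction rule: mask email addresses before text reaches a model.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")

# Hypothetical audit trail; a real deployment would persist this durably.
audit_log: list[dict] = []


def redact(text: str) -> str:
    """Mask email addresses so they are not sent to the model."""
    return EMAIL_RE.sub("[REDACTED_EMAIL]", text)


def tokenize(text: str) -> set[str]:
    """Lowercase alphanumeric tokens used for overlap scoring."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, k: int = 2) -> list[str]:
    """Rank documents by keyword overlap (a stand-in for vector similarity)."""
    q = tokenize(query)
    scored = sorted(
        ((len(q & tokenize(body)), doc_id) for doc_id, body in POLICY_DOCS.items()),
        reverse=True,
    )
    return [doc_id for score, doc_id in scored[:k] if score > 0]


def build_prompt(user_id: str, query: str) -> str:
    """Redact the query, retrieve context, log the sources, and assemble a prompt."""
    clean_query = redact(query)
    doc_ids = retrieve(clean_query)
    context = "\n".join(f"[{d}] {POLICY_DOCS[d]}" for d in doc_ids)
    audit_log.append({
        "ts": datetime.now(timezone.utc).isoformat(),
        "user": user_id,
        "query": clean_query,
        "sources": doc_ids,
    })
    return (
        "Answer using only the policy excerpts below and cite their IDs.\n"
        f"{context}\n\nQuestion: {clean_query}"
    )
```

Calling `build_prompt("agent-7", "Invoice exception from ap@example.com: what must it reference?")` masks the email, ranks the invoice policy first, and records which sources were used, which is the kind of trail an auditor would ask for.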

3) Culture & Process Debt

GenAI amplifies whatever process it touches. If workflows are inconsistent, data is messy, or review steps are missing, the model inherits that debt. ROI emerges when organizations deliberately redesign processes, clarify human-in-the-loop gates, and standardize quality bars.

4) Adoption ≠ Impact

Usage metrics (logins, prompts) are not outcomes. Generative AI ROI requires instrumentation: cycle time, error rate, rework, cost-per-case, deflection rate, and margin. No metrics → no ROI narrative → no funding to scale.
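The gap between usage and impact shows up as soon as both are computed over the same records. This sketch assumes a hypothetical `Ticket` record with a prompt count (usage), a deflection flag, and a handling cost (outcomes); the field names and any numbers you feed it are illustrative, not benchmarks.

```python
from dataclasses import dataclass


@dataclass
class Ticket:
    # Hypothetical ticket record; field names are illustrative.
    prompts_sent: int     # usage signal: how often the assistant was invoked
    deflected: bool       # outcome: resolved without a human agent
    handling_cost: float  # outcome: fully loaded cost to resolve, in USD


def usage_vs_outcome(tickets: list[Ticket]) -> dict[str, float]:
    """Contrast a usage metric with outcome metrics for the same tickets."""
    n = len(tickets)
    if n == 0:
        raise ValueError("no tickets to measure")
    return {
        "prompts_per_ticket": sum(t.prompts_sent for t in tickets) / n,  # usage
        "deflection_rate": sum(t.deflected for t in tickets) / n,        # outcome
        "cost_per_case": sum(t.handling_cost for t in tickets) / n,      # outcome
    }
```

If `prompts_per_ticket` climbs month over month while `deflection_rate` and `cost_per_case` stay flat, that is adoption without impact.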

5) Skill Mismatch

Teams need practical skills: problem framing, prompt patterns, evaluation methods, safe-use policy, and handoff design. Well-scoped tasks with guardrails consistently outperform free-form “ask me anything” deployments.

Real-World Case Studies: Failures & Wins

Case Study A — The Pilot Graveyard

A global services firm launched 20+ pilots in sales enablement and marketing content. Demos wowed leadership; none cleared security reviews or SLA thresholds. Post-mortem: no integration to CRM and ticketing, fuzzy acceptance criteria, and zero KPI baselines. Lesson: define KPIs and enterprise integrations before building.

Case Study B — Back-Office Automation Pays

A manufacturing enterprise shifted budget from website chat to vendor due-diligence summaries, invoice exception handling, and HR knowledge retrieval. The result: fewer manual hours, faster turnarounds, audit-friendly trails—and visible Generative AI ROI within one quarter.

Case Study C — Developer Productivity with Guardrails

With curated repos, policy prompts, unit-test scaffolds, and review gates, an engineering org boosted throughput on boilerplate code, migrations, and refactors—while lowering defect rates due to automated checks.

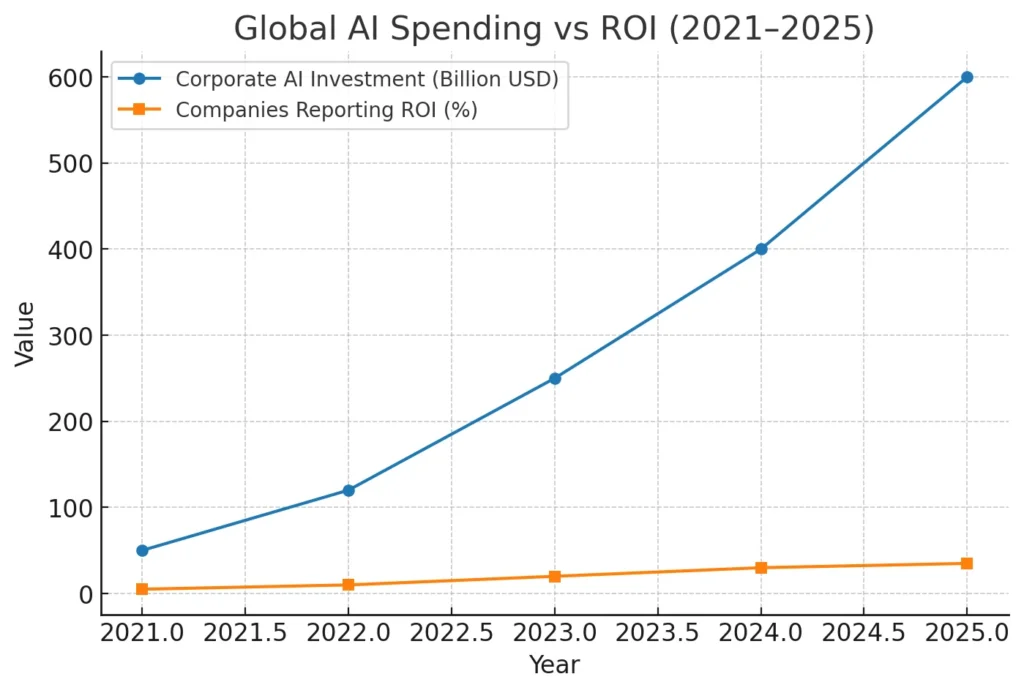

Is There an “AI Bubble”? Market Context

Markets briefly wobbled after the “95%” headline, but the signal for operators is simpler: investment outpaced operational readiness. The fix is not retreat—it’s refocus. Triage to measurable work, make integration the star, and report outcomes like any other transformation program.

7-Step Playbook to Improve Generative AI ROI

- Start where money leaks. Identify 3–5 measurable bottlenecks (e.g., case turnarounds, exception queues, rework). Frame each as a hypothesis: “We will reduce cycle time by 30% for X process.”

- Instrument for impact. Establish baselines (cost-per-case, cycle time, error rate) and target deltas. Connect telemetry to BI so leaders can see the before/after in real time.

- Design for memory + integration. Use RAG with policy prompts, document redaction, and audit logs. Enable safe write-backs to ERP/CRM/ITSM so AI output actually moves work forward.

- Blend GenAI with proven analytics. Pair language models with rules, analytics, and optimization. For example, let GenAI draft a response while a rules engine verifies compliance fields.

- Right-skill the work. Train teams on reusable prompt patterns, evaluation, and fallback flows. Reward process improvements, not just clever prompts.

- Govern for safety and scale. Track drift, hallucinations, PII exposure, and escalation rates. Run red-team exercises, maintain model cards, and review incidents as you would for any other production system.

- Prove it, then expand. Roll out in phases with A/B or stepped-wedge designs. When KPIs beat thresholds, expand to neighboring processes; if not, fix the bottleneck or kill the pilot fast.
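The “GenAI drafts, rules verify” pairing from the playbook can be sketched as a deterministic validator that sits between a model’s draft and any write-back. Here the `draft` dictionary stands in for structured model output (no model is called), and the required fields, the `PO-` number format, and the 10,000 approval limit are hypothetical rules chosen only for illustration.

```python
import re

# Hypothetical compliance rules for an invoice-exception reply.
REQUIRED_FIELDS = ("vendor_id", "po_number", "amount", "reason")
PO_PATTERN = re.compile(r"PO-\d{6}")
APPROVAL_LIMIT = 10_000.0  # assumed policy: larger amounts need a human approver


def check_compliance(draft: dict) -> list[str]:
    """Return rule violations for a drafted record; an empty list means it passes."""
    # Treat None or an empty string as a missing field.
    problems = [f"missing field: {f}" for f in REQUIRED_FIELDS if draft.get(f) in (None, "")]
    po = draft.get("po_number")
    if po not in (None, "") and not PO_PATTERN.fullmatch(str(po)):
        problems.append("po_number has an invalid format")
    amount = draft.get("amount")
    if isinstance(amount, (int, float)) and amount > APPROVAL_LIMIT:
        problems.append("amount exceeds approval limit; route to a human")
    return problems


def route(draft: dict) -> str:
    """Auto-send only drafts with zero violations; everything else goes to review."""
    return "auto_send" if not check_compliance(draft) else "human_review"
```

Keeping the rules outside the prompt means they are testable, versioned, and enforced even when the model output drifts.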

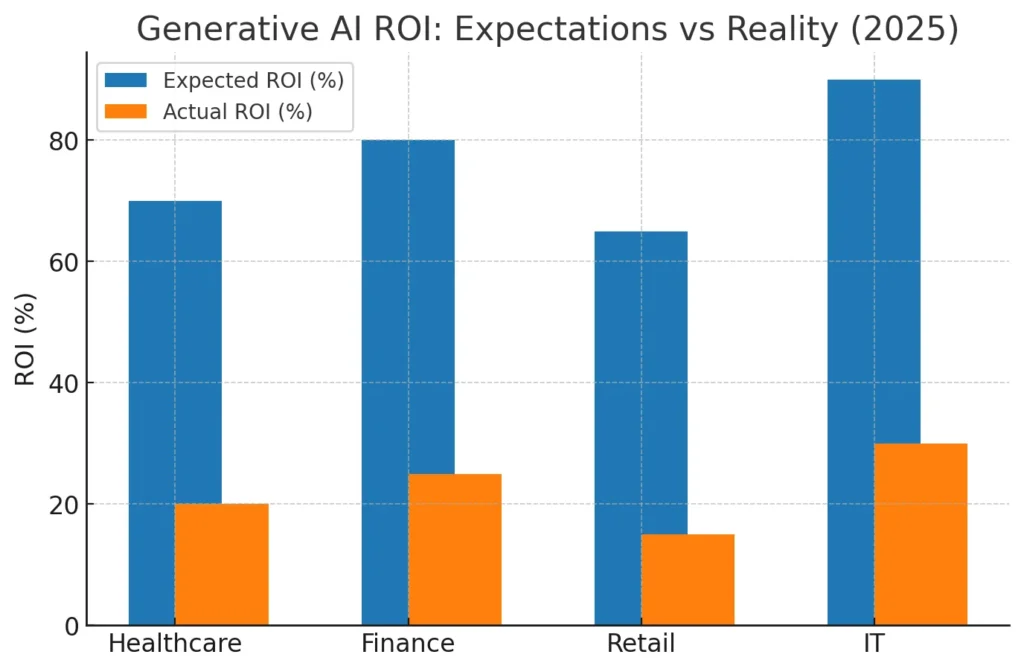

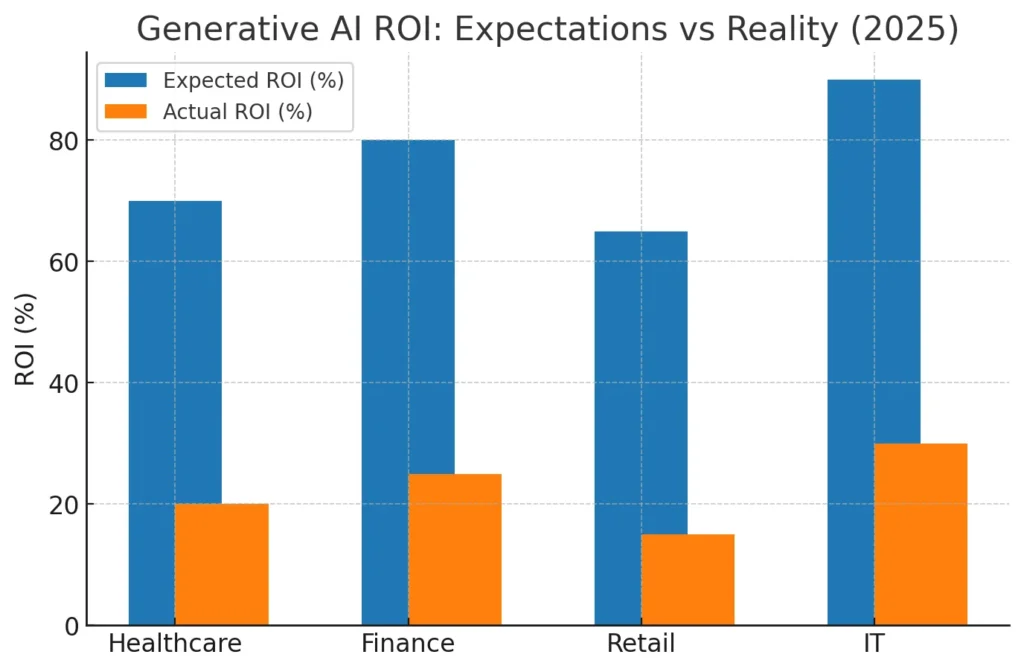

Industry Comparison: Expectations vs Reality (with chart)

IT and media often show earlier wins (cleaner data, faster iteration). Regulated sectors—healthcare and finance—move carefully due to compliance, audit, and data sensitivity. Retail and logistics see traction in knowledge retrieval, catalog ops, and support deflection. In every case, realized Generative AI ROI correlates with data readiness, integration depth, and disciplined measurement.

90-Day Execution Roadmap (People • Process • Tech)

Days 0–30: Discovery & Baselines — Inventory 10–20 candidate use cases; score by volume, rework cost, SLA pain, and data availability. Select the top 3. Map the current workflow, define acceptance criteria, and capture baseline KPIs (cycle time, cost, error).
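The Days 0–30 scoring step can be made explicit with a simple weighted score over the four criteria named above. The 1-to-5 ratings, the weights, and the candidate names are hypothetical; each team should set weights that reflect its own priorities.

```python
from dataclasses import dataclass

# Hypothetical weights for the roadmap's four criteria; they sum to 1.0.
WEIGHTS = {"volume": 0.3, "rework_cost": 0.3, "sla_pain": 0.2, "data_availability": 0.2}


@dataclass
class Candidate:
    name: str
    ratings: dict[str, int]  # each criterion rated 1 (low) to 5 (high)


def score(c: Candidate) -> float:
    """Weighted sum of 1-5 ratings; higher means a stronger first pilot."""
    return sum(WEIGHTS[k] * c.ratings[k] for k in WEIGHTS)


def top_n(candidates: list[Candidate], n: int = 3) -> list[str]:
    """Return the names of the n highest-scoring use cases."""
    ranked = sorted(candidates, key=score, reverse=True)
    return [c.name for c in ranked[:n]]
```

Writing the weights down forces the prioritization debate to happen once, in the open, instead of pilot by pilot.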

Days 31–60: Prototype & Integrate — Build thin-slice pilots with retrieval, policy prompts, and audit logging. Connect to read APIs first (CRM/ERP/ITSM). Establish human-in-the-loop checkpoints and a red/green decision for each step. Start weekly KPI reviews.

Days 61–90: Hardening & Rollout — Add write-backs with guardrails. Create runbooks, model cards, and fallback paths. Launch a controlled rollout (10–20% of traffic). If KPIs beat thresholds, scale; if not, remediate or retire.
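For the controlled rollout, one common approach is deterministic hash-based bucketing, so a given case always lands in the same arm, paired with an explicit go/no-go rule. The function names, the SHA-256 bucketing, and the “lower is better” KPI convention (e.g. median cycle time) are assumptions for illustration; a real decision should also check statistical significance, which this sketch omits.

```python
import hashlib


def in_rollout(case_id: str, percent: int) -> bool:
    """Deterministically assign a case to the pilot arm.

    Hashing the ID (rather than calling random()) keeps each case in the
    same arm across retries, which keeps comparisons clean.
    """
    digest = hashlib.sha256(case_id.encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 100  # bucket in 0..99
    return bucket < percent


def rollout_decision(pilot_kpi: float, control_kpi: float, min_improvement: float) -> str:
    """For a lower-is-better KPI, scale only if the pilot beats control by the threshold."""
    if control_kpi <= 0:
        raise ValueError("control KPI must be positive")
    improvement = (control_kpi - pilot_kpi) / control_kpi
    return "scale" if improvement >= min_improvement else "remediate_or_retire"
```

With `percent=15`, roughly 15% of cases go to the pilot; `rollout_decision(30.0, 40.0, 0.2)` returns `"scale"` because the pilot is 25% faster than control.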

KPI Framework & Benchmarks to Prove Generative AI ROI

- Throughput: Cases/agent/day; % of automated summaries and classifications that are correct.

- Cycle Time: Median & P90 minutes from intake to resolution.

- Quality: Defect rate, rework %, compliance flags, escalation rate.

- Cost: Cost-per-case, BPO hours avoided, infrastructure $/successful task.

- Adoption: Active users, tasks per user, assist acceptance rate.

- Satisfaction: CSAT for assisted tickets; internal NPS for users.

Tip: present a single “ROI scorecard” monthly—before/after bars for each KPI, plus a short narrative tying changes to business outcomes. That is how you transform anecdote into Generative AI ROI that finance teams can back.
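The cycle-time rows of such a scorecard reduce to a median and a P90 before and after, plus a percentage change. This sketch uses the nearest-rank method for P90 (one of several percentile definitions) and assumes cycle times are already collected in minutes; the function names are illustrative.

```python
import math
from statistics import median


def p90(values: list[float]) -> float:
    """90th percentile using the nearest-rank method."""
    if not values:
        raise ValueError("no values")
    ordered = sorted(values)
    rank = math.ceil(9 * len(ordered) / 10)  # 1-based rank
    return ordered[rank - 1]


def scorecard(before: list[float], after: list[float]) -> dict[str, dict[str, float]]:
    """Before/after rows for cycle-time KPIs (minutes), with % change."""
    rows = {}
    for name, fn in (("median_minutes", median), ("p90_minutes", p90)):
        b, a = fn(before), fn(after)
        rows[name] = {"before": b, "after": a, "pct_change": round(100 * (a - b) / b, 1)}
    return rows
```

Reporting P90 alongside the median matters because GenAI often speeds up typical cases while leaving the slow tail untouched.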

Build vs Buy: How to Choose for Faster ROI

Buy (platform or vertical tool) when your workflow is common (support, procurement, HR knowledge), integrations are ready-made, and speed is paramount. Evaluate on: integration catalog, retrieval quality, governance, pricing transparency, and exportability of your data.

Build when your process is differentiating, data is proprietary, or you need bespoke controls. Use a modular architecture: a vector/RAG layer, a prompt library, an evaluation harness, and an observability stack. Even then, consider buying the plumbing (auth, observability) and focusing your build on the last-mile experience.
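An evaluation harness can start as a fixed set of test cases run against whatever function produces answers, with a release bar attached. In this sketch `fake_model` is a hypothetical stand-in for a real model call, and substring grading plus the 0.9 threshold are deliberately simple assumptions; teams typically add richer graders over time.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    prompt: str
    must_contain: str  # simple grading rule: expected phrase in the answer


def run_eval(model: Callable[[str], str], cases: list[EvalCase], threshold: float = 0.9) -> dict:
    """Run every case, grade it, and report whether the pass rate meets the release bar."""
    if not cases:
        raise ValueError("no eval cases")
    failures = [c.prompt for c in cases if c.must_contain.lower() not in model(c.prompt).lower()]
    pass_rate = 1 - len(failures) / len(cases)
    return {"pass_rate": pass_rate, "release_ok": pass_rate >= threshold, "failures": failures}


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a model call, used only to exercise the harness."""
    canned = {
        "What is the refund approval limit?": "Refunds over 500 USD need manager approval.",
        "Which portal is used for travel?": "Please book through any website you like.",
    }
    return canned.get(prompt, "I don't know.")
```

Because `run_eval` accepts any callable, the same cases can gate a bought platform and an in-house build alike.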

What’s Next for Generative AI ROI (with trend chart)

Adoption keeps rising, but value accrues to teams that treat GenAI like any production system: iterate against KPIs, integrate deeply, and govern continuously. Expect the pilot-to-production gap to narrow as patterns (RAG, policy prompts, evaluation harnesses) standardize. The winners will target automatable, measurable work—and report Generative AI ROI in the language of the P&L.

Related Guides on NestOfWisdom

- Custom Digital Services — practical automations for small businesses.

- AI Business Insights — more strategy and ROI explainers.

- Contact — talk to us about an ROI-first GenAI rollout.

FAQ

Does “95%” mean Generative AI ROI is impossible?

No. It signals that most pilots haven’t been measured or integrated well enough yet. When you start with back-office pain, wire to systems of record, and track KPIs, Generative AI ROI is achievable.

What are the fastest win areas?

Document intake and classification, compliance drafts, knowledge retrieval, and support deflection—work with high volume, clear quality bars, and existing SLAs.

How much does measurement matter?

It’s the difference between “cool demo” and funded program. Baselines, targets, and an ROI scorecard turn usage into evidence.

Is a customer-facing chatbot the best place to start?

Not usually. Customer chat is brand-visible but integration-heavy and risk-sensitive. Most teams earn early Generative AI ROI by automating internal work first, then layering on customer-facing experiences.

What’s one change we can make this month?

Pick one workflow with a backlog problem (e.g., invoice exceptions). Stand up retrieval, draft responses with policy prompts, add a review gate, and measure the cycle-time delta for 30 days.

References

- Fortune — MIT report: 95% of gen-AI pilots at companies are failing (Aug 18, 2025).

- Financial Times (Unhedged) — Tech ‘sell-off’ & MIT report context (Aug 21, 2025).

- Investor’s Business Daily — Why the MIT study pressured AI stocks (Aug 21, 2025).

- McKinsey — The State of AI (2025) overview and full report (PDF).

- Harvard Business Review — The AI Revolution Won’t Happen Overnight (Jun 24, 2025).

- Harvard Business Review — Will Your GenAI Strategy Shape Your Future or Derail It? (Jul 25, 2025).

Conclusion: The story isn’t that AI “doesn’t work”—it’s that value requires focus, integration, and proof. Start with measurable pain, wire assistants into the flow of work, and report outcomes like any other transformation. That’s how you convert pilots into Generative AI ROI your board will back.

Nest of Wisdom Insights is a dedicated editorial team focused on sharing timeless wisdom, natural healing remedies, spiritual practices, and practical life strategies. Our mission is to empower readers with trustworthy, well-researched guidance rooted in both Tamil culture and modern science.

Our aim is for knowledge about natural living and spirituality to benefit everyone.

- Nest of Wisdom Author: https://nestofwisdom.com/author/varakulangmail-com/