Apple Intelligence iOS 26 is about one thing: a calmer, more capable day. It’s the empathetic rewrite that defuses a tense email, the five-bullet summary that clears a foggy thread, and the ear-level translation that keeps eye contact in a crowded shop. This guide turns features into habits, so Apple Intelligence iOS 26 becomes an invisible helper rather than another screen to manage. This isn’t just technology; it’s a companion for your daily life.

Table of Contents

- The Big Picture: What Is Apple Intelligence iOS 26?

- Why This Update Matters (Cultural + Productivity)

- Star of the Show: AirPods Live Translation

- Privacy & Architecture (On-Device vs Private Cloud Compute)

- Real-World Scenarios: Work, Travel, Accessibility, Family

- Case Study: Chennai Family, Paris Trip

- Quick Start: 5-Minute Setup + Prompts

- Should You Upgrade Now or Wait?

- Compatibility Snapshot: Devices & Languages

- Known Limitations & Workarounds

- How Apple Stacks Up (Google, Samsung & Ear-Level AI)

- Cultural Impact: Confidence, Language Pride & Everyday Dignity

- Psychology of Trust: Why Privacy-First Design Matters

- Future Roadmap: What the Next Wave Could Bring

- 30-Day Adoption Plan

- Best Practices for Productivity (Pros, Students, Families)

- Recommended Reading on NestOfWisdom (Internal Links)

- External References

- FAQ

- Conclusion

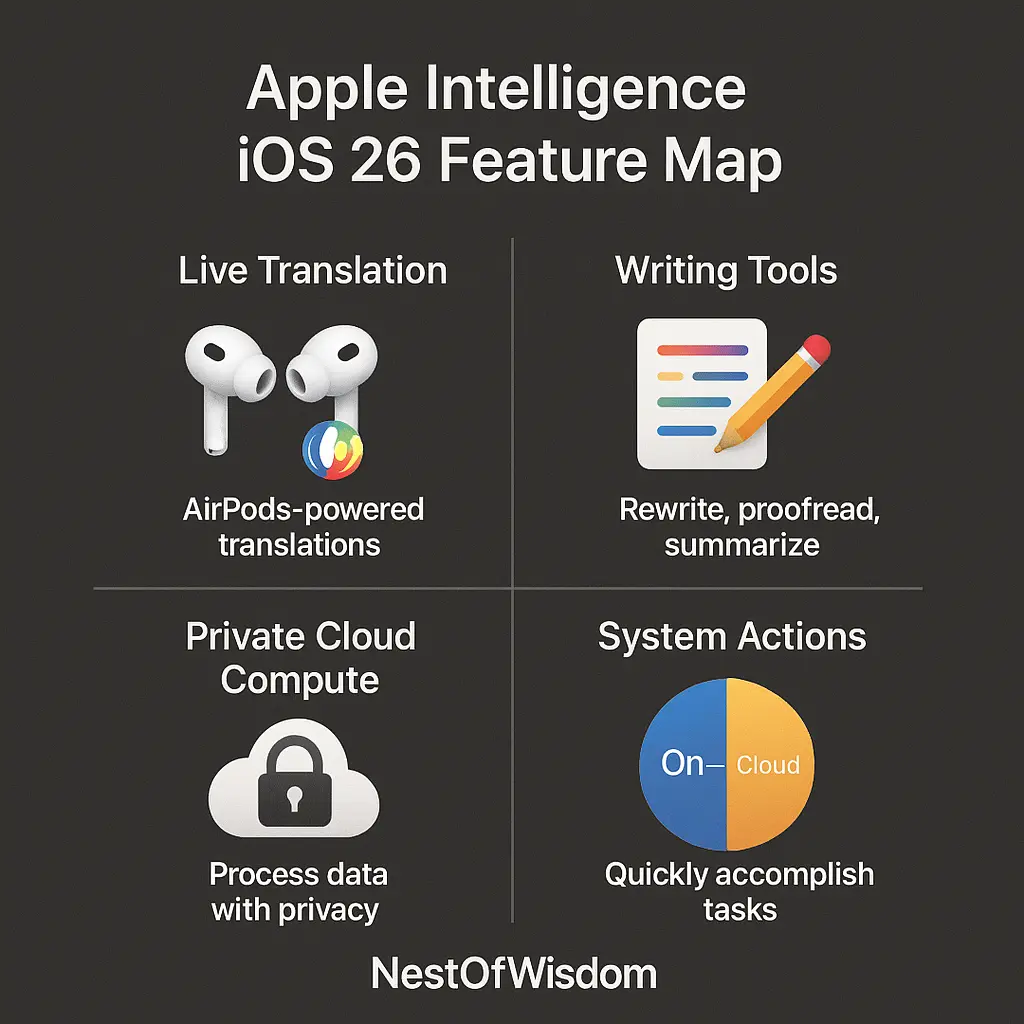

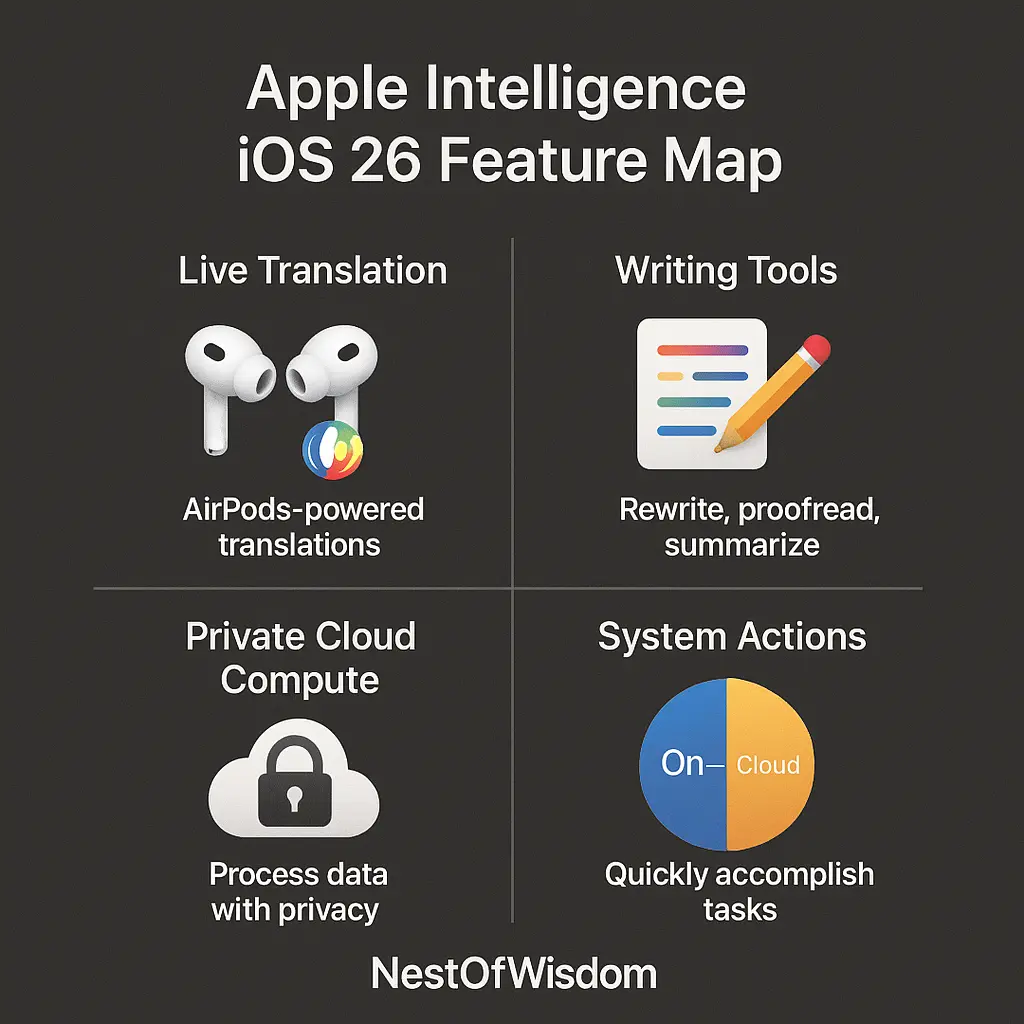

The Big Picture: What Is Apple Intelligence iOS 26?

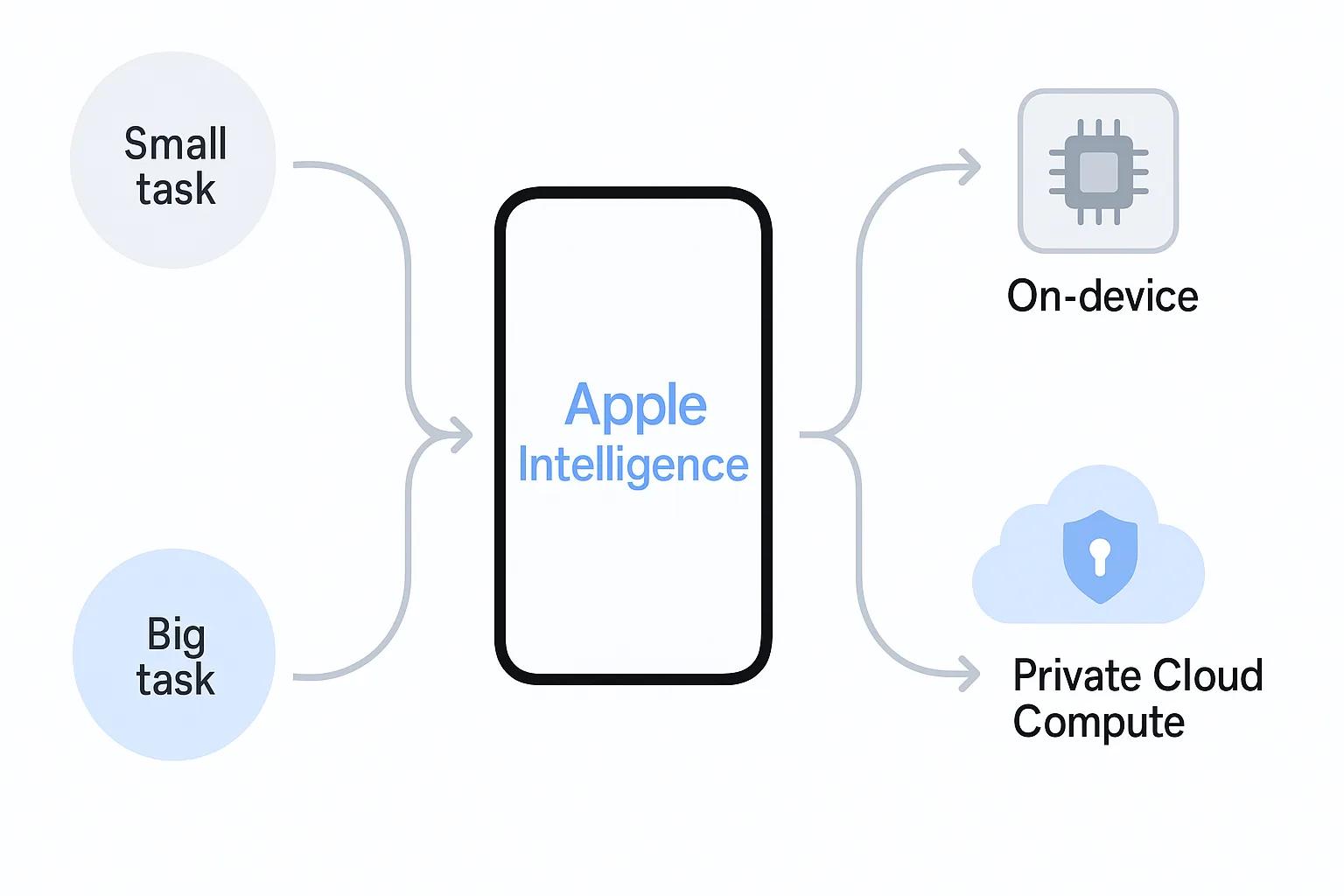

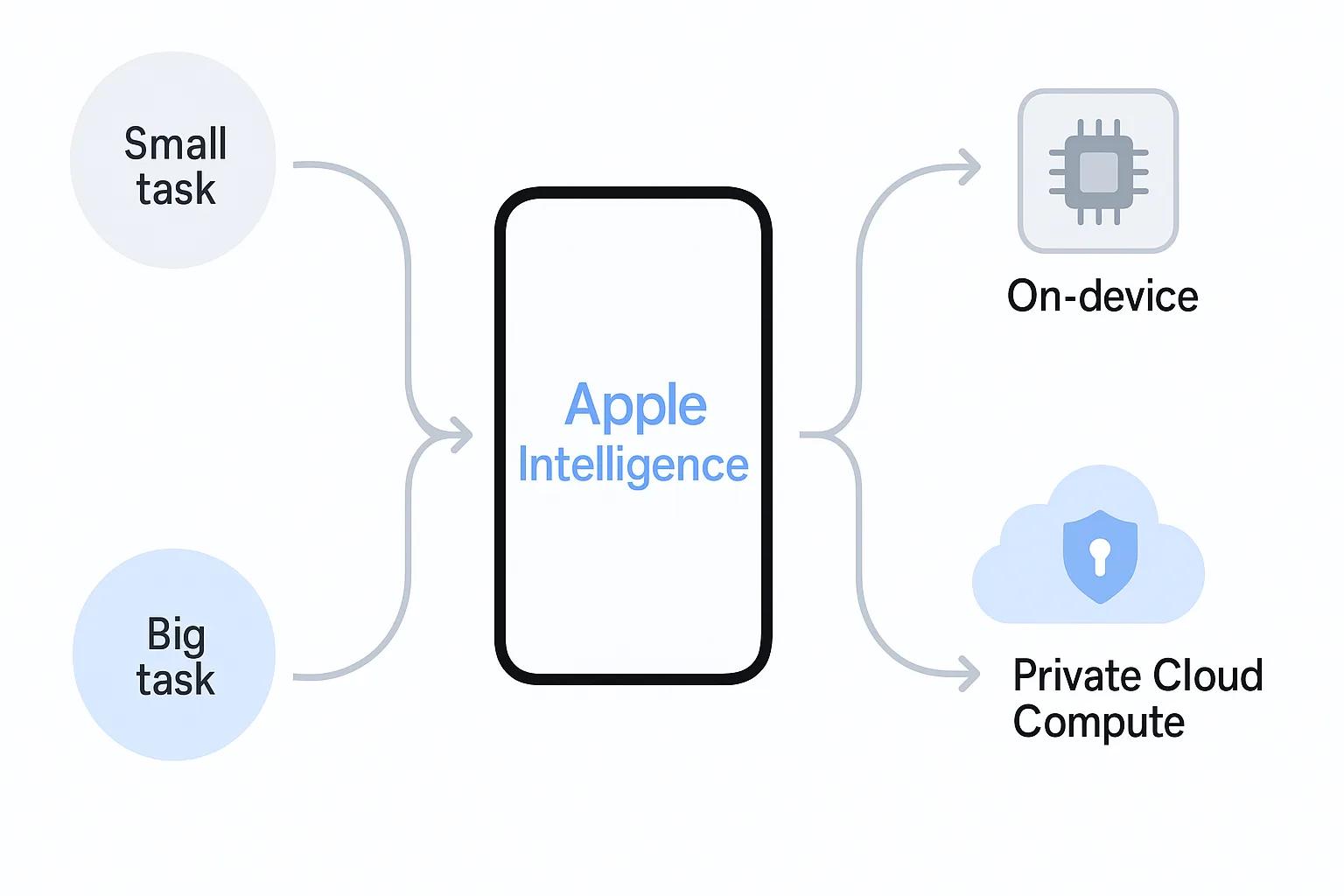

Apple Intelligence iOS 26 weaves assistive and generative AI into core iPhone flows: rewrite text in Mail, summarize Notes, suggest context-aware actions in Messages, and translate at your ears with AirPods. The design is hybrid—light tasks run on device for speed and privacy; heavier requests escalate to Private Cloud Compute on Apple silicon servers. This keeps Apple Intelligence iOS 26 fast, respectful, and native. Fewer app switches, more ambient help, less friction.

Put simply: “More work, less strain.” With Apple Intelligence iOS 26, you do more by doing less.

Why This Update Matters (Cultural + Productivity)

- Moments, not menus: Apple Intelligence iOS 26 puts writing tools where you type; summaries where you read; actions where you decide.

- Ear-level translation: Conversations feel human again—no passing a phone back and forth.

- Privacy by default: On-device first; cloud only when needed—foundational to trust.

- Everyday polish: Reader mode, calmer notifications, smarter images—small wins that add up.

“A new tool is real progress only if it makes life easier for us.” That’s the spirit of Apple Intelligence iOS 26.

Star of the Show: AirPods Live Translation

The translator moves from your screen to your ears. With Apple Intelligence iOS 26, you speak naturally; iPhone transcribes; translation reaches your AirPods quickly; your reply is rendered back in the other person’s language. The difference isn’t just latency—it’s flow. You look at faces, not phones.

- Both parties speak normally; iPhone handles transcription.

- Translation streams to your AirPods with minimal delay.

- Your reply returns in their language via speaker or paired device.
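The flow above can be pictured as a simple turn-taking loop. The Python below is a toy model, not Apple’s implementation: transcription is simulated by passing the transcript in directly, and the tiny `PHRASEBOOK` dictionary, `translate`, and `conversation_turn` are hypothetical stand-ins for the real speech and translation models. For simplicity it only uses the speaker route for your reply. It just shows who hears what, and where.

```python
from dataclasses import dataclass

# Hypothetical phrasebook standing in for a real translation model (toy data only).
PHRASEBOOK = {
    ("fr", "en"): {"bonjour": "hello", "merci": "thank you"},
    ("en", "fr"): {"hello": "bonjour", "thank you": "merci"},
}


@dataclass
class Turn:
    speaker: str  # "you" or "them"
    transcript: str  # text produced by the (simulated) transcription step
    translation: str  # text delivered to the listener
    route: str  # where the translation is played


def translate(text: str, source: str, target: str) -> str:
    """Look up a phrase in the toy phrasebook; flag anything it does not know."""
    table = PHRASEBOOK.get((source, target), {})
    return table.get(text.lower(), f"[no translation: {text}]")


def conversation_turn(speaker: str, transcript: str, your_lang: str, their_lang: str) -> Turn:
    """Route one spoken turn: their speech goes to your AirPods, your reply is played aloud."""
    if speaker == "them":
        # The other person's words are translated into your language, in your ears.
        return Turn(speaker, transcript, translate(transcript, their_lang, your_lang), "your AirPods")
    # Your reply is translated into their language and played on the iPhone speaker.
    return Turn(speaker, transcript, translate(transcript, your_lang, their_lang), "iPhone speaker")


if __name__ == "__main__":
    for who, said in [("them", "Bonjour"), ("you", "Thank you")]:
        turn = conversation_turn(who, said, your_lang="en", their_lang="fr")
        print(f"{turn.speaker}: {turn.transcript!r} -> {turn.translation!r} via {turn.route}")
```

The key design point the sketch captures is direction: each turn is translated toward the listener, and the output lands where that listener can hear it.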

A Tamil example, where the language pair is supported: “Bonjour!” becomes “வணக்கம்!” (“Vanakkam!”, hello) for you; your Tamil reply returns as French for them. Language is not a barrier; Apple Intelligence turns it into a bridge.

Privacy & Architecture (On-Device vs Private Cloud Compute)

Apple Intelligence iOS 26 prioritizes on-device processing for speed and privacy. Short rewrites and quick summaries typically stay local; heavier requests escalate to Private Cloud Compute (PCC). PCC runs on Apple silicon servers with scoped access and verifiable protections; data used to fulfill your task is not retained to train general models. In human terms: your iPhone does as much as possible itself, and calls for help only when necessary—carefully, briefly, and transparently.
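The on-device-first rule can be pictured as a routing decision. Apple does not publish a single threshold like this, so the Python below is a conceptual sketch under stated assumptions: `ON_DEVICE_TOKEN_BUDGET`, the `needs_large_model` flag, and `route` are all hypothetical. What it illustrates is the shape of the policy: stay local by default, and escalate only when a request exceeds what the device can handle.

```python
from dataclasses import dataclass

# Hypothetical limit for this sketch; Apple's real escalation criteria are not public in this form.
ON_DEVICE_TOKEN_BUDGET = 2_000


@dataclass
class Request:
    task: str  # e.g. "rewrite" or "summarize"
    input_tokens: int  # rough size of the text to process
    needs_large_model: bool = False  # hypothetical flag for complex requests


def route(request: Request) -> str:
    """Return 'on-device' unless the request exceeds the toy device limits."""
    if request.needs_large_model or request.input_tokens > ON_DEVICE_TOKEN_BUDGET:
        return "private-cloud-compute"
    return "on-device"


if __name__ == "__main__":
    examples = [
        Request("rewrite", input_tokens=300),
        Request("summarize", input_tokens=12_000),
        Request("rewrite", input_tokens=150, needs_large_model=True),
    ]
    for req in examples:
        print(req.task, req.input_tokens, "->", route(req))
```

Making the local branch the fallback mirrors the idea above: the cloud path is the exception, not the rule.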

Your personal data is yours. That promise underpins real-world adoption of Apple Intelligence iOS 26.

Real-World Scenarios: Work, Travel, Accessibility, Family

Work: Summarize a 30-message email thread, draft a reply in your tone, attach a doc, and schedule a follow-up—without leaving Mail. With Apple Intelligence iOS 26, this becomes a morning ritual rather than a time sink.

Travel: Ear-level translation makes ordering food, confirming fares, or asking directions easier—especially when stress and noise peak. Apple Intelligence iOS 26 reduces the awkwardness of device-passing.

Accessibility & Learning: Clarifying summaries help learners; live translation builds confidence for multilingual families. “When learning gets easier, confidence follows.”

Family life: After delicate calls, ask for a “warm but brief” rewrite before texting elders—small care, big respect.

Case Study: Chennai Family, Paris Trip

Arun and Meera travel with their mother Lakshmi from Chennai to Paris. Arun speaks English and Tamil; Meera knows basic French. At a café, Arun orders in Tamil; with Apple Intelligence iOS 26, his iPhone speaks the French translation aloud for the barista. Later, a museum guard explains route changes in French; Arun hears Tamil in his AirPods, while Meera catches key terms to confirm. No device-passing; no crowd stress. Just human conversation with Apple Intelligence iOS 26. “Love is the language.”

Back at the hotel, Arun says: “Summarize today’s 25-message thread and draft a friendly ‘thanks + next steps’ reply.” Apple Intelligence iOS 26 proposes a concise, warm email. He edits one line and hits send. Friction leaves; life returns.

Quick Start: 5-Minute Setup + Prompts

- Update to the latest iOS 26 release and turn on Apple Intelligence; ensure AirPods firmware is current.

- Enable translation; download offline packs for key languages.

- Review Privacy & Security; keep on-device-first defaults.

- Turn on dictation with auto-punctuation; enable hands-free Siri.

Prompts that save time (for Apple Intelligence iOS 26):

- “Rewrite this shorter but warm: [text]” — tone without losing empathy.

- “Summarize this page in 5 bullets I can act on.” — Reader → action.

- “Translate and read aloud in Spanish: [phrase]” — practice before you speak.

- “After this call, remind me to send the proposal.” — action + reminder chain.

Tip: Ask for “summary + next steps” to get direct action points in Apple Intelligence iOS 26.

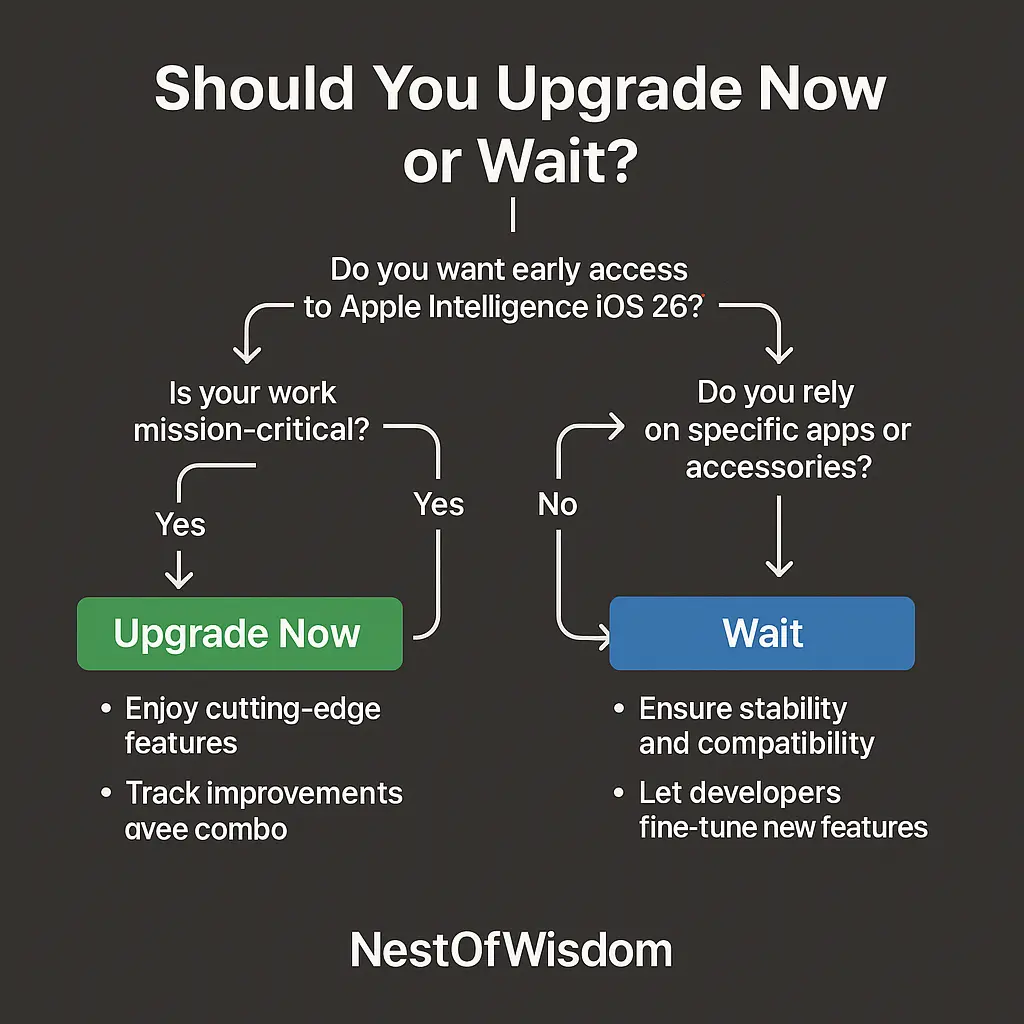

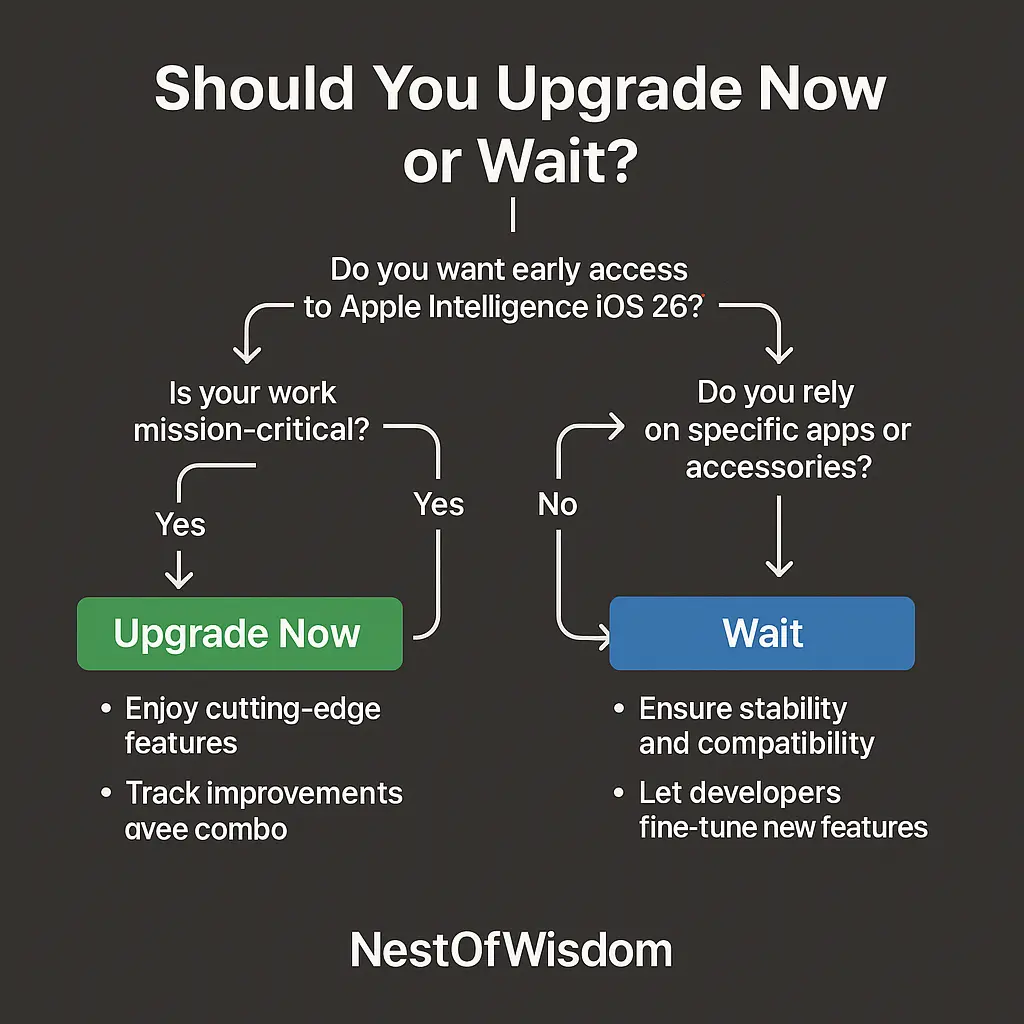

Should You Upgrade Now or Wait?

If your phone is mission-critical, wait for 26.1; point releases often polish stability and expand language/device coverage. If you enjoy exploring and your workflow is flexible, upgrade now and dive into writing tools + translation in Apple Intelligence iOS 26. Either way, the habits below will compound.

Compatibility Snapshot: Devices & Languages

Devices: Recent iPhones paired with current-gen AirPods deliver the best latency and audio. Older models may see higher delay or a subset of features in Apple Intelligence iOS 26.

Languages: Major pairs launch first; coverage grows with point releases. For trips depending on less common languages, verify support in Settings before relying on ear-level translation in Apple Intelligence iOS 26. “Before you travel, check the language list.”

Known Limitations & Workarounds

- Connectivity: Real-time translation is better online. Workaround: download offline packs; star essential phrases.

- Noise: Very loud spaces degrade transcription. Workaround: step aside or use the device speaker for near-field pickup.

- Third-party lag: Some app hooks arrive later. Workaround: bridge via share sheets + Notes/Mail for now.

- Battery: Continuous speech + translation uses power. Workaround: carry a compact battery; enable Low Power Mode.

How Apple Stacks Up (Google, Samsung & Ear-Level AI)

Interpreter modes exist across ecosystems, but Apple Intelligence iOS 26 stands out for two reasons: the privacy model (on-device first; PCC when needed) and the native feel of system hooks. If Apple keeps execution tight, ear-level translation becomes an expectation—not a novelty—especially for mixed-language teams and frequent travelers.

In short: “Enthusiasm alone isn’t enough; trust and security matter.” That’s why many will choose Apple Intelligence iOS 26.

Cultural Impact: Confidence, Language Pride & Everyday Dignity

Language is dignity. With Apple Intelligence iOS 26, you look at a person’s face, not a screen. For elders, shopkeepers, and drivers, that eye contact is respect. For children, it’s courage to try a new tongue. For families that move between Tamil and English daily, it’s relief. “Love is the language.” When technology protects privacy and builds confidence, people actually use it in the intimate moments where it matters most.

Psychology of Trust: Why Privacy-First Design Matters

People adopt what they trust. Because Apple Intelligence iOS 26 defaults to on-device processing and explains cloud escalation, you won’t hesitate to use it for personal topics: health, family, finances. That’s where saved minutes compound into lower stress and fewer mistakes. “Security is the bridge to greater usefulness.”

Future Roadmap: What the Next Wave Could Bring

- Deeper Shortcuts integration for one-phrase automations in iOS 26 and beyond.

- More languages and dialect nuance; better register control (formal/informal).

- Richer on-device models allowing more Apple Intelligence iOS 26 tasks to work offline.

- Cross-device continuity: pick up a translation or summary flow on iPad/Mac.

30-Day Adoption Plan

Week 1 — Explore & Baseline

- Update devices; confirm AirPods firmware; download key language packs.

- Test two safe scripts (ordering coffee, asking directions) with Apple Intelligence iOS 26.

- Use three rewrite/summary tasks on real emails.

Week 2 — Daily Workflows

- Summarize two long articles + one email thread with Apple Intelligence iOS 26; save 10–15 minutes.

- Build a “translation favorites” list (phrases for travel or guests).

- Create one Shortcut: summary → reminder → calendar note.

Week 3 — Travel & Meetings

- Pilot ear-level translation in two real settings (counter, taxi stand).

- Note phrasing that works best; mark quiet spots for better pickup.

- Try image assistance to extract text from signage; log one time-saver use.

Week 4 — Review & Lock-In

- List top three features you actually used in Apple Intelligence iOS 26; surface them via Settings/Shortcuts.

- Pick your update rhythm (immediate vs point releases).

- Teach a 60-second setup to a family member or colleague.

Best Practices for Productivity (Pros, Students, Families)

- Pros: Morning triage: summarize overnight threads, draft two replies, schedule one follow-up—powered by Apple Intelligence iOS 26.

- Students: Ask for “explain like I’m 12” rewrites; create study bullets; practice pronunciation with read-aloud.

- Families: After calls, say “remind me to send the school form.” Aim for one micro-action per conversation.

- Travelers: Star key phrases; pre-practice in read-aloud mode to tune your ear.

A reminder: “Small habits, big change.” Build them inside Apple Intelligence iOS 26 for big gains.

Recommended Reading on NestOfWisdom (Internal Links)

- Cloud Tools for Small Businesses and Freelancers: The Complete Guide — workflow, automation, and privacy practices that complement Apple Intelligence iOS 26.

- AI Copilots at Work: 9 Powerful Ways Small Teams Can Do More with Less — everyday use cases that pair with Apple Intelligence iOS 26 summaries and rewrites.

- Best AI Productivity Tools: Top 10 Must-Have Apps for Daily Life — build a lean stack around Apple Intelligence iOS 26 routines.

- Generative AI in Business: Real-World Use Cases, Benefits & Risks — executive-level framing for deployment and governance.

External References

- Apple Intelligence — Official Overview

- Apple Security Blog — Private Cloud Compute

- Private Cloud Compute — Security Guide & Docs

- WIRED — How Apple’s Private Cloud Compute Works

FAQ

Does AirPods live translation require the newest iPhone?

Best performance comes from recent iPhones paired with compatible AirPods because Apple Intelligence iOS 26 emphasizes low-latency, on-device processing.

Will it work offline?

Some features do, but real-time translation generally improves online. Download offline packs and star essential phrases for Apple Intelligence iOS 26 travel days.

How do I keep privacy tight?

Keep on-device-first defaults, review permission prompts, and let Apple Intelligence iOS 26 escalate to Private Cloud Compute only when tasks exceed device limits.

What’s the simplest productivity win?

Summaries + tone-aware rewrites. Used every day in Apple Intelligence iOS 26, they can plausibly return 15–20 minutes to your day.
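To see what a daily saving means across the 30-day adoption plan, the arithmetic is simple. This sketch assumes the 15–20 minute figure actually holds for your routine; `hours_saved` is just an illustrative helper, not part of any Apple tool.

```python
def hours_saved(minutes_per_day: float, days: int = 30) -> float:
    """Convert a daily time saving (in minutes) into total hours over `days` days."""
    return minutes_per_day * days / 60


if __name__ == "__main__":
    for minutes in (15, 20):
        print(f"{minutes} min/day over 30 days = {hours_saved(minutes):.1f} hours")
```

At 15 minutes a day that comes to 7.5 hours over 30 days; at 20 minutes, 10 hours.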

What if my primary language pair isn’t supported yet?

Support expands with point releases. Meanwhile, keep a quick sheet of key phrases, and test the closest supported pair in Apple Intelligence iOS 26.

Conclusion

Apple Intelligence iOS 26 is not spectacle; it’s friction removed. The email you finish sooner, the multilingual chat that keeps dignity, the summary that returns ten minutes to your day. If you enjoy the frontier and your setup is compatible, upgrade now and let Apple Intelligence iOS 26 earn its place. If stability is mission-critical, wait for 26.1: the same privacy-respecting foundation, with extra polish. New technology should make life easier for you, not harder.


Nest of Wisdom Insights is a dedicated editorial team focused on sharing timeless wisdom, natural healing remedies, spiritual practices, and practical life strategies. Our mission is to empower readers with trustworthy, well-researched guidance rooted in both Tamil culture and modern science.

Our aim is for knowledge about natural living and spirituality to benefit everyone.

- Nest of Wisdom Author: https://nestofwisdom.com/author/varakulangmail-com/
