AI Copilots at Work are no longer experimental—they’re becoming the default interface for modern knowledge work. Embedded assistants inside email, docs, sheets, IDEs, CRMs, and wikis now draft content, summarize meetings, answer questions across your knowledge base, and generate code or tests. For small teams, AI Copilots at Work act like a force multiplier: they reduce blank-page time, compress meetings, standardize workflows, and surface insights faster—when paired with clear guardrails and measurable outcomes. Educational content only—not legal, security, or financial advice.

Table of Contents

- What Are AI Copilots at Work?

- Why Now: Evidence & Momentum

- 9 Benefits of AI Copilots at Work

- Role Playbooks: Daily Use with AI Copilots at Work

- Anonymized Case Studies Using AI Copilots at Work

- Limits & Risks You Must Manage

- Implementation Roadmap for AI Copilots at Work (90-Day Plan)

- Prompt & Template Design Principles

- Source-of-Truth Setup

- Governance & Compliance for AI Copilots at Work

- Evaluation & ROI for AI Copilots at Work

- Adoption & Change Management

- FAQs

- Conclusion & Next Steps

- References

What Are AI Copilots at Work?

“Copilot” describes an AI assistant embedded directly in the tools you already use: Microsoft 365, Google Workspace, GitHub, Notion, help desks, CRMs, and more. These copilots draft emails, briefs, and SOPs; summarize meetings and chats; answer questions from your documents; create code and tests; and assemble charts or executive summaries from raw data. When implemented with simple playbooks, AI Copilots at Work elevate both throughput and quality while keeping control with the human reviewer.

What they’re not: they don’t replace human judgment. They can be wrong, miss nuance, or leak data if used carelessly. Authentic ROI comes from pairing copilots with clear “do/don’t” rules, strong sourcing, and a scoreboard you review monthly.

Why Now: Evidence & Momentum

Independent studies and enterprise pilots (see References) report faster task completion, higher developer satisfaction, and reduced cycle time. For small teams with thin headcount, AI Copilots at Work convert saved minutes into throughput (more done) and quality (better done). Leaders who standardize prompts and reviews see durable gains, because everyone ships to the same structure, with fewer rewrites and faster approvals.


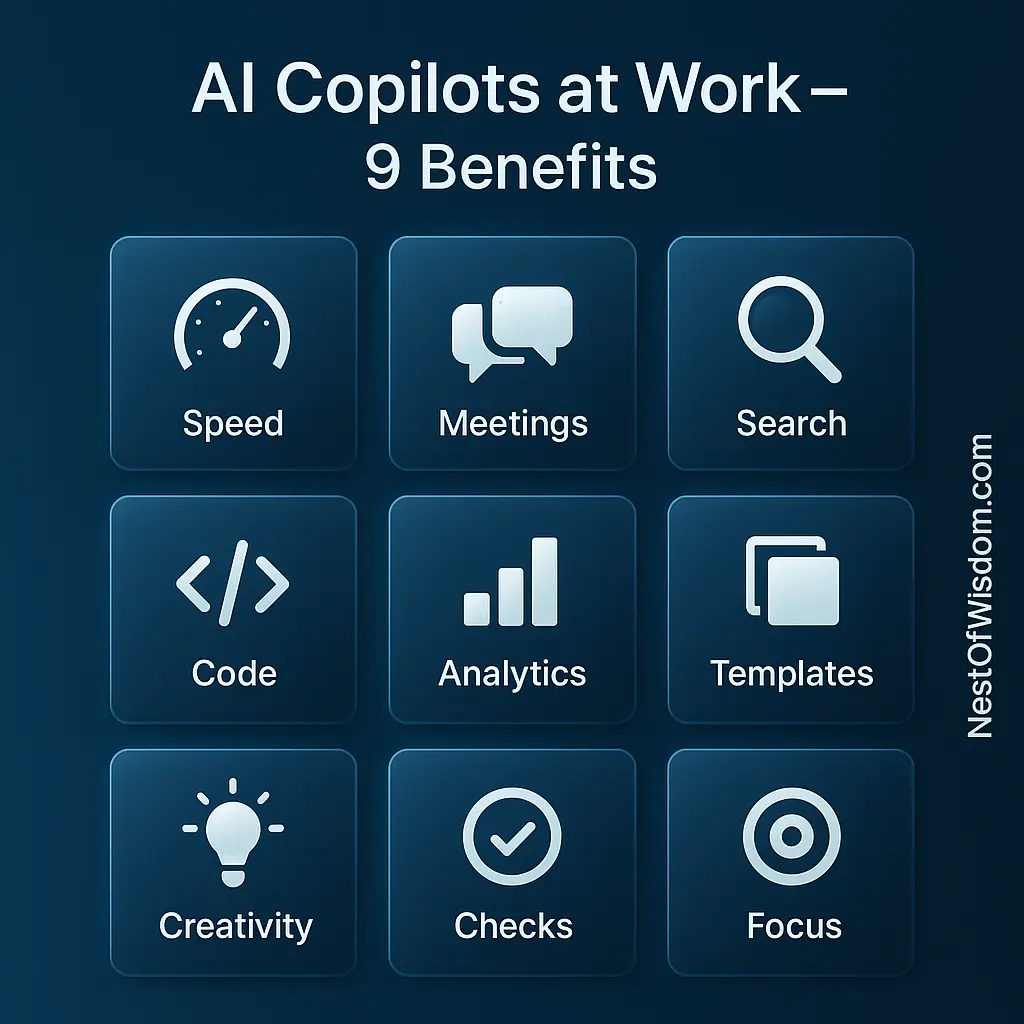

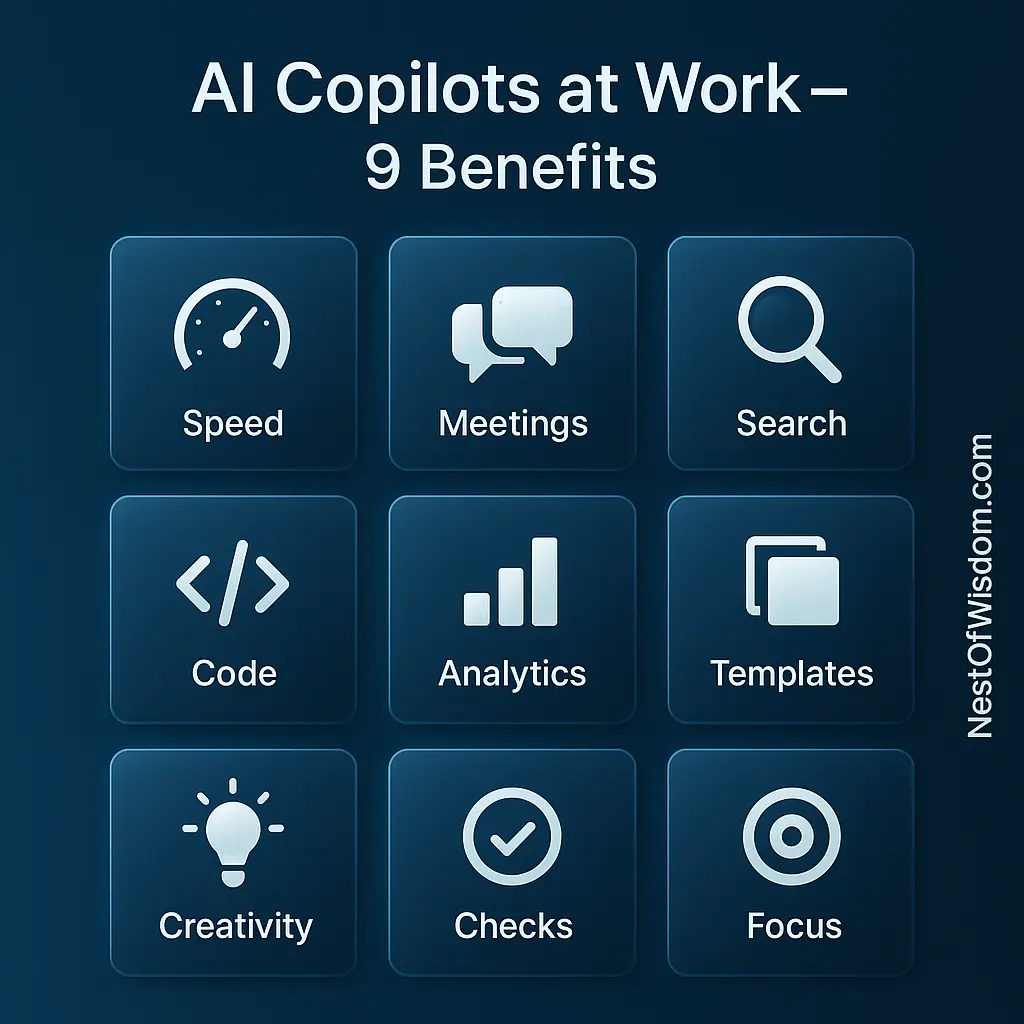

9 Benefits of AI Copilots at Work

These nine wins are the most repeatable across industries. Use them as your pilot “north stars.”

- Speed to first draft: outlines, emails, briefs, slide notes in minutes—so humans spend time on judgment and nuance.

- Meeting compression: auto-summaries with action items and owners reduce follow-up drift; decisions are captured in one place.

- Contextual search: ask questions across docs, tickets, wikis, and prior meetings with reference links back to sources.

- Coding acceleration: suggestions, tests, and boilerplate free seniors for design; juniors learn by comparison.

- Analytics & reporting: turn raw tables into charts and executive-ready summaries, faster than spreadsheet wrangling.

- Process standardization: prompt templates + review checklists = consistent outputs across teams and time zones.

- Creativity boost: brainstorm variations; avoid blank-page paralysis with structured idea exploration.

- Error reduction: reviewing drafts against a checklist catches misses earlier, so there are fewer last-minute escalations.

- Focus time: offload routine tasks so people decide, negotiate, and build relationships.

Role Playbooks: Daily Use with AI Copilots at Work

Sales pod (3 reps + lead). The assistant creates call briefs from CRM data and notes, drafts recap emails, and proposes mutual-action plans. The lead reviews tone and accuracy. Over a quarter, follow-up latency drops and opportunities advance faster, a sign that AI Copilots at Work free up time for the conversations that close deals.

Support desk. Troubleshooting steps become suggested replies linked to KB articles. When patterns emerge, the assistant drafts a reusable solution. Escalations include a one-screen summary with logs, hypotheses, and next tests, which tightens the feedback loop.

Marketing trio. The assistant drafts briefs, UTM-consistent posts, and A/B headline variants. A “golden examples” library sets voice rules and brand snippets. Monthly reviews retire low-value prompts and promote winners, an enablement habit that compounds the returns from AI Copilots at Work.

Engineering squad. Boilerplate and test writing speed up; “review prompts” clarify intent and edge cases. Seniors focus on design and threat modeling; juniors learn faster by comparing their drafts with the suggestions, so craft skills sharpen over time.

Anonymized Case Studies Using AI Copilots at Work

Consulting boutique (4 people). Proposal and recap templates save 6–8 hours per consultant per week. A phrase bank improves tone consistency; a reviewer checklist reduces client back-and-forth—sustained gains from AI Copilots at Work.

Seed-stage software team. Boilerplate, tests, and refactors speed up; cycle time for repetitive tasks falls. The team logs “before/after” pull-request times to quantify impact—evidence that AI Copilots at Work pay off beyond anecdotes.

Nonprofit operations. Board packets get summarized, actions extracted, and update drafts prepared. Meetings shorten, deadlines become clearer, and admin overhead drops. A one-page usage policy prevents accidental sharing of donor data, keeping AI Copilots at Work safe to use.

Limits & Risks You Must Manage

- Accuracy & hallucinations: always review; require sources or snippets for claims.

- Data leakage: classify info; block sensitive fields; prefer enterprise plans with logs.

- Privacy & IP: clarify ownership; confirm vendor retention and model-training policies.

- Over-reliance: keep humans in the loop for intent, decisions, and sign-off.

- Shadow AI: unapproved tools creep in without training and policy—enable the approved path early.
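One way to act on “classify info; block sensitive fields” is a small gate that checks a document’s classification label before it is shared with a copilot. The sketch below is a minimal Python illustration, assuming a hypothetical four-level scheme (public, internal, confidential, restricted) and a policy that lets only the first two reach an assistant; your own labels and cutoff will differ.

```python
from dataclasses import dataclass
from enum import IntEnum


class Sensitivity(IntEnum):
    """Hypothetical classification levels, ordered from least to most sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Assumed policy: anything above INTERNAL never goes into a prompt.
MAX_ALLOWED = Sensitivity.INTERNAL


@dataclass
class Document:
    name: str
    label: Sensitivity


def may_share_with_copilot(doc: Document, max_allowed: Sensitivity = MAX_ALLOWED) -> bool:
    """True if the document's label is at or below the allowed level."""
    return doc.label <= max_allowed


def filter_shareable(docs: list[Document]) -> list[Document]:
    """Keep only documents that pass the classification gate, preserving order."""
    return [d for d in docs if may_share_with_copilot(d)]


if __name__ == "__main__":
    library = [
        Document("Style-Guide-2025-06", Sensitivity.PUBLIC),
        Document("Pricing-Play-2025-08", Sensitivity.INTERNAL),
        Document("Donor-List-2025-07", Sensitivity.RESTRICTED),
    ]
    for doc in filter_shareable(library):
        print(doc.name)
```

Using an `IntEnum` makes “at or below the allowed level” an ordinary comparison, so tightening the policy is a one-line change to `MAX_ALLOWED`.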

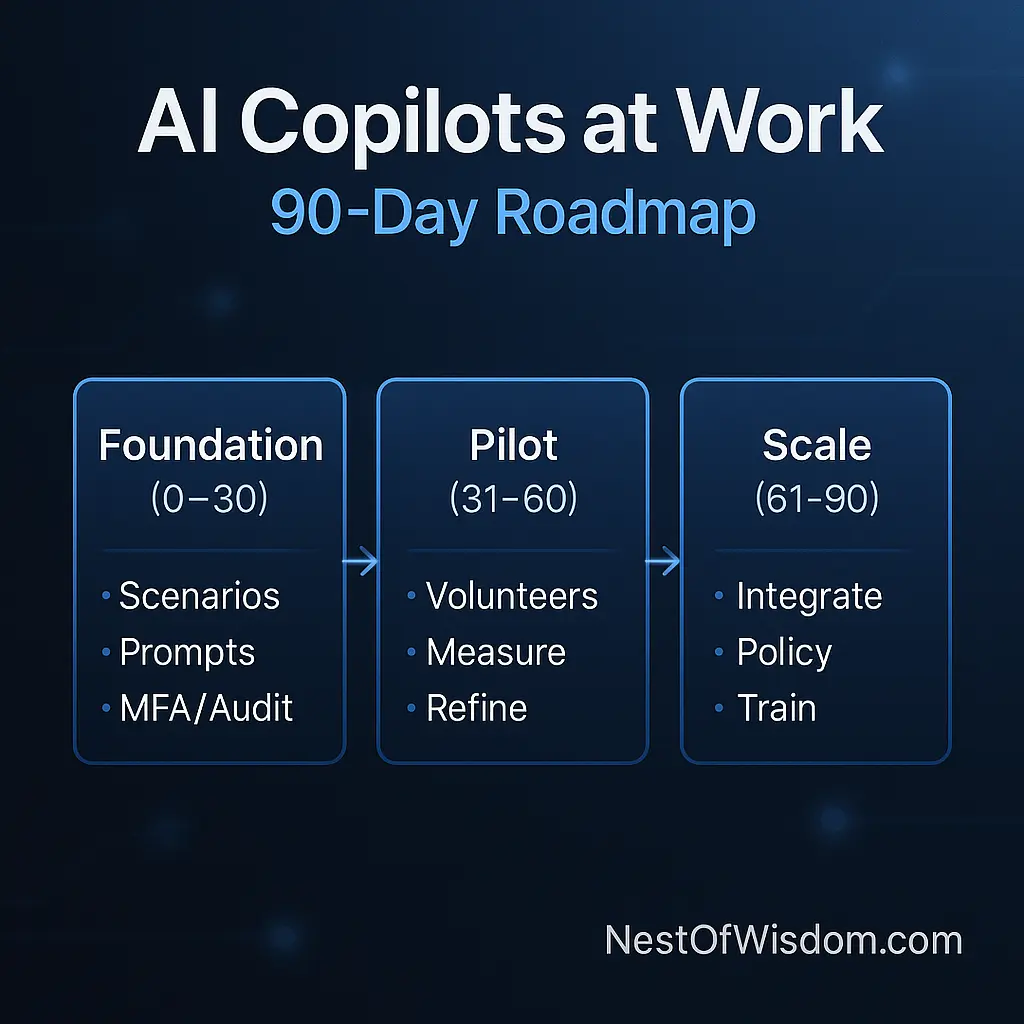

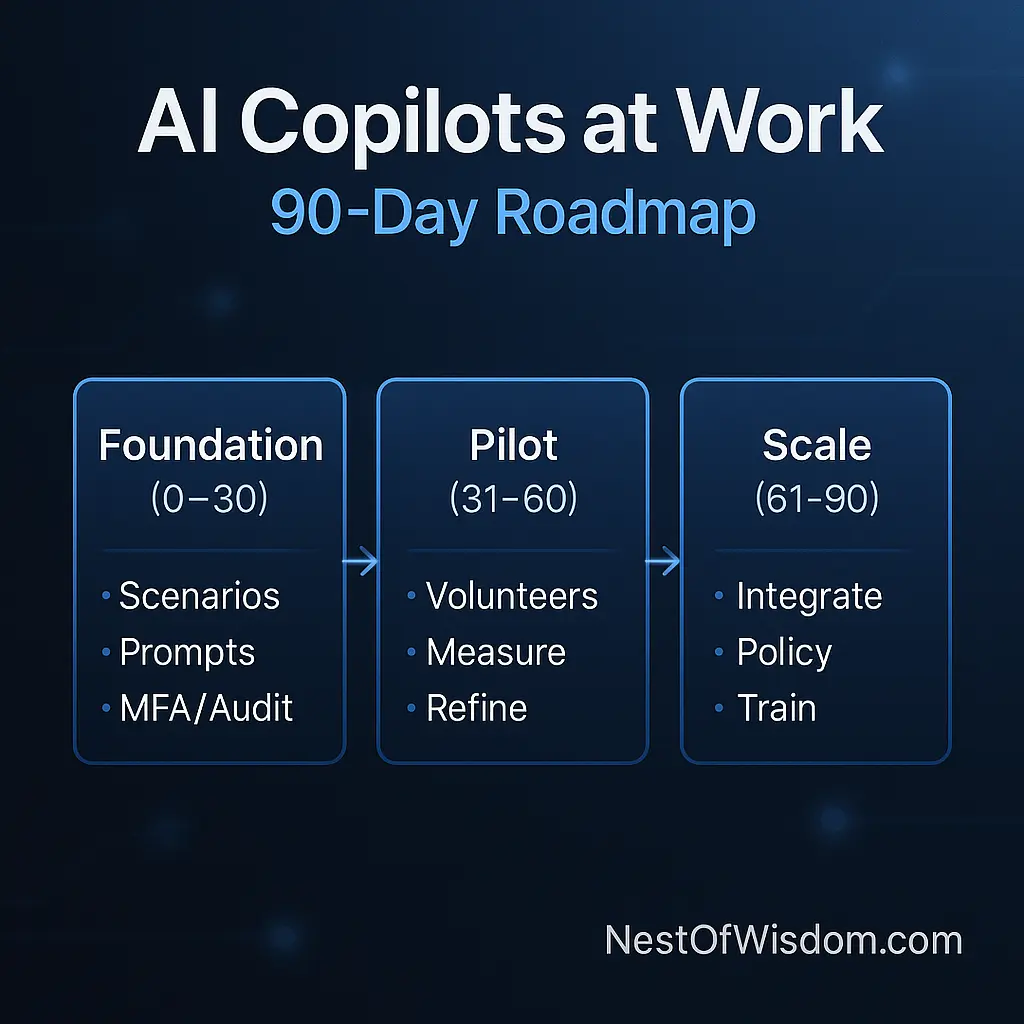

Implementation Roadmap for AI Copilots at Work (90-Day Plan)

Use a three-phase rollout that proves value, builds trust, and scales only after you have measurement and guardrails. This cadence de-risks adoption while showcasing quick wins from AI Copilots at Work.

Days 0–30: Foundation

- Pick 3 high-value scenarios (meeting notes, client emails, code boilerplate).

- Create prompt templates and review checklists (purpose, audience, tone, sources).

- Enable MFA, restrict sensitive data in prompts, and turn on audit logs.

- Publish a one-page “how we use AI Copilots at Work” policy with examples.

Days 31–60: Pilot

- Run a 2-week pilot with 5–10 volunteers; record task times before the pilot (baseline) and during it.

- Measure time saved, quality score, and rework rate for the scenarios powered by AI Copilots at Work.

- Refine prompts with “golden examples” and add do/don’t patterns; archive what doesn’t move the needle.
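The pilot comparison reduces to simple arithmetic: compare typical task time before and during the pilot for each scenario. The following is a minimal Python sketch, assuming you log per-task minutes for each scenario; the 20% “keep” threshold is a hypothetical cutoff for what “moves the needle,” not a figure from this guide, and the sample numbers are illustrative only.

```python
from statistics import median

# Hypothetical threshold: keep a scenario only if median task time drops by at least 20%.
KEEP_THRESHOLD = 0.20


def percent_time_saved(baseline_minutes: list[float], pilot_minutes: list[float]) -> float:
    """Fractional reduction in median task time from baseline to pilot."""
    before = median(baseline_minutes)
    after = median(pilot_minutes)
    if before <= 0:
        raise ValueError("baseline median must be positive")
    return (before - after) / before


def triage(scenarios: dict[str, tuple[list[float], list[float]]]) -> dict[str, str]:
    """Label each scenario 'keep' or 'archive' based on median time saved."""
    decisions = {}
    for name, (baseline, pilot) in scenarios.items():
        saved = percent_time_saved(baseline, pilot)
        decisions[name] = "keep" if saved >= KEEP_THRESHOLD else "archive"
    return decisions


if __name__ == "__main__":
    # Illustrative numbers only, not measured results.
    logged = {
        "meeting notes": ([30, 35, 40], [15, 18, 20]),
        "client emails": ([12, 10, 14], [11, 10, 12]),
    }
    for name, (baseline, pilot) in logged.items():
        print(f"{name}: {percent_time_saved(baseline, pilot):.0%} saved")
    print(triage(logged))
```

Medians are used rather than means so that one unusually slow or fast task does not swing a small pilot’s result.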

Days 61–90: Scale

- Expand to adjacent workflows (reports, ticket replies, doc Q&A).

- Integrate with your knowledge base; build a “source-of-truth” library the copilot can cite.

- Expand the one-page policy into a full AI usage policy; schedule quarterly refresher training and office hours.

Prompt & Template Design Principles

Authentic results come from prompt engineering that mirrors your real process. Standardize these elements so outputs become reliable—no matter who prompts.

- Purpose first: “Write a client-ready summary” beats “summarize.” State audience, length, and outcome.

- Source-anchored: point to exact docs or repo paths; ask for quoted snippets or footnotes for claims.

- Structure: provide the skeleton (headings, bullets, tone) so outputs are consistent across teams.

- Checklists: build “review prompts” that critique the draft against your checklist before hand-off.

- Examples win: keep 2–3 “golden examples” to define voice, formatting, and acceptable risk.
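These principles map naturally onto a structured template. Below is a minimal Python sketch built around a hypothetical `PromptSpec` type; it only assembles prompt text and does not call any copilot API. The second function reuses the same checklist to produce a “review prompt” that asks the assistant to critique a draft before hand-off.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the fields a team standardizes on."""
    purpose: str
    audience: str
    tone: str
    sources: list[str] = field(default_factory=list)
    structure: list[str] = field(default_factory=list)
    checklist: list[str] = field(default_factory=list)


def build_prompt(spec: PromptSpec) -> str:
    """Render a drafting prompt: purpose first, then audience, tone, sources, skeleton."""
    lines = [
        f"Purpose: {spec.purpose}",
        f"Audience: {spec.audience}",
        f"Tone: {spec.tone}",
        "Sources (quote or footnote any claim drawn from these):",
        *[f"- {src}" for src in spec.sources],
        "Structure:",
        *[f"{i}) {section}" for i, section in enumerate(spec.structure, start=1)],
    ]
    return "\n".join(lines)


def build_review_prompt(spec: PromptSpec, draft: str) -> str:
    """Render a review prompt that critiques a draft against the checklist."""
    checks = "\n".join(f"- {item}" for item in spec.checklist)
    return (
        "Review the draft below against each checklist item. "
        "For every item, answer pass/fail and quote the offending text.\n"
        f"Checklist:\n{checks}\n\nDraft:\n{draft}"
    )


if __name__ == "__main__":
    # Field values taken from the client recap template in this guide.
    recap = PromptSpec(
        purpose="Summarize today's call for the client in 200-250 words.",
        audience="Executive sponsor + project manager.",
        tone="Clear, confident, collaborative.",
        sources=["/docs/meeting-notes/2025-08-26.md", "CRM#OPP-231"],
        structure=["What we heard", "Decisions", "Action items (owner+date)", "Next meeting"],
        checklist=["Remove internal jargon", "Confirm dates", "Include 1 line of gratitude"],
    )
    print(build_prompt(recap))
```

Keeping the spec as data means a team can store it next to its golden examples and diff changes when a prompt is refined.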

Prompt Template — Client Recap Email

Purpose: Summarize today’s call for the client in 200–250 words.

Audience: Executive sponsor + project manager.

Sources: /docs/meeting-notes/2025-08-26.md; CRM#OPP-231.

Tone: Clear, confident, collaborative.

Structure: 1) What we heard, 2) Decisions, 3) Action items (owner+date), 4) Next meeting.

Checklist: Remove internal jargon; confirm dates; include 1 line of gratitude.

Source-of-Truth Setup

Assistants are only as good as the information they can reach. Curate a small, high-quality set of sources and keep it tidy.

- Library: policies, product briefs, style guides, onboarding docs, “how we sell,” “how we support,” and architectural decisions.

- Naming: human-readable file names + version stamps (e.g., Pricing-Play-2025-08).

- Lifecycle: monthly cleanup; archive stale content; pin the 20% you use weekly.

- Access: least-privilege—share only what the copilot needs to answer questions well.
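The naming and lifecycle rules work together: if every file name ends in a YYYY-MM version stamp, the monthly cleanup can start from an automatically generated list of archive candidates. What follows is a Python sketch, assuming that naming convention (as in Pricing-Play-2025-08) and a hypothetical six-month staleness window; files without a valid stamp are flagged too, since they break the convention.

```python
import re
from datetime import date
from typing import List, Optional, Tuple

# Matches a trailing YYYY-MM stamp, with or without a file extension,
# e.g. "Pricing-Play-2025-08" or "Pricing-Play-2025-08.md".
STAMP = re.compile(r"-(\d{4})-(\d{2})(?:\.\w+)?$")

# Hypothetical policy: stamps older than this many months are archive candidates.
STALE_AFTER_MONTHS = 6


def version_month(filename: str) -> Optional[Tuple[int, int]]:
    """Return (year, month) from the version stamp, or None if there is no valid stamp."""
    match = STAMP.search(filename)
    if match is None:
        return None
    year, month = int(match.group(1)), int(match.group(2))
    return (year, month) if 1 <= month <= 12 else None


def months_between(earlier: Tuple[int, int], later: Tuple[int, int]) -> int:
    """Whole calendar months from `earlier` to `later`."""
    return (later[0] - earlier[0]) * 12 + (later[1] - earlier[1])


def archive_candidates(filenames: List[str], today: date) -> List[str]:
    """List files that are stale or lack a valid stamp; both need a human look."""
    now = (today.year, today.month)
    flagged = []
    for name in filenames:
        stamp = version_month(name)
        if stamp is None or months_between(stamp, now) > STALE_AFTER_MONTHS:
            flagged.append(name)
    return flagged


if __name__ == "__main__":
    library = ["Pricing-Play-2025-08", "Style-Guide-2024-01.md", "Onboarding.md"]
    for name in archive_candidates(library, date.today()):
        print("review for archive:", name)
```

The script only proposes candidates; the archive decision stays with whoever owns the library.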

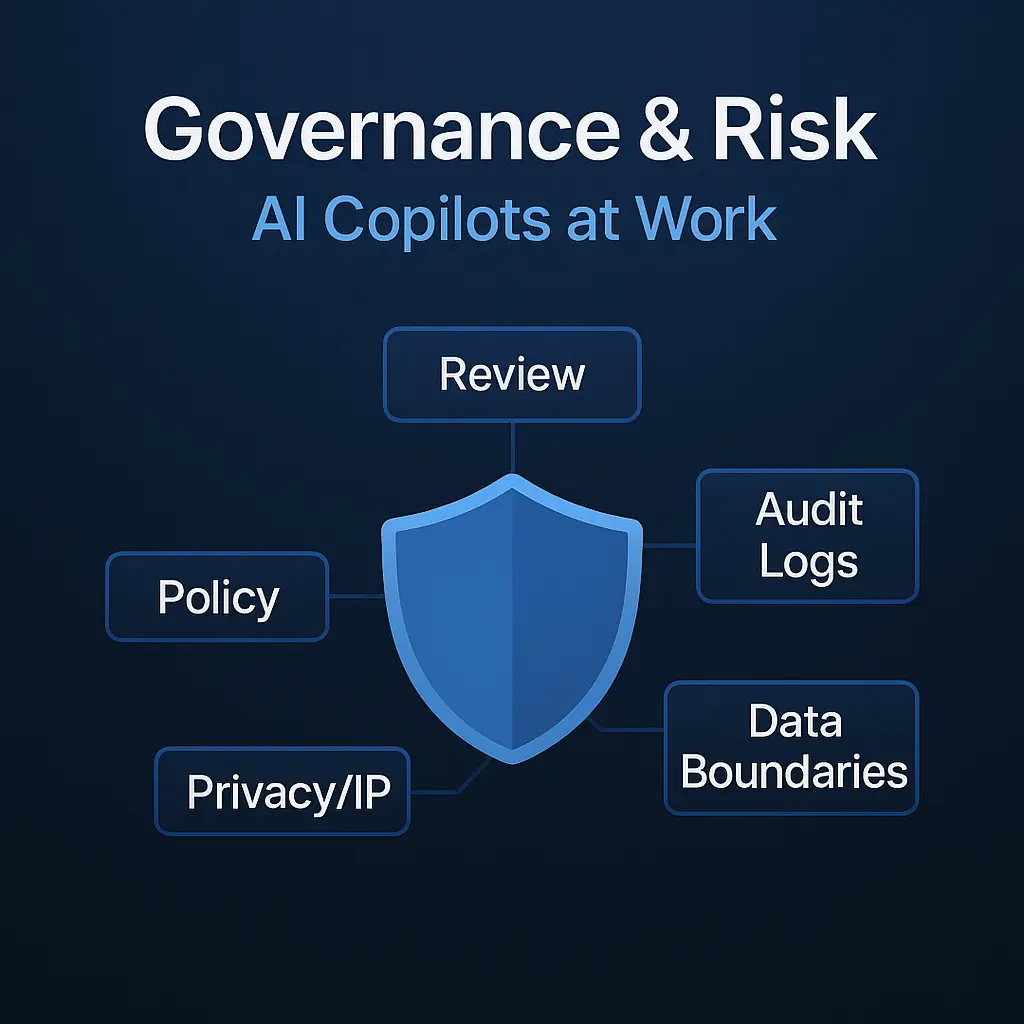

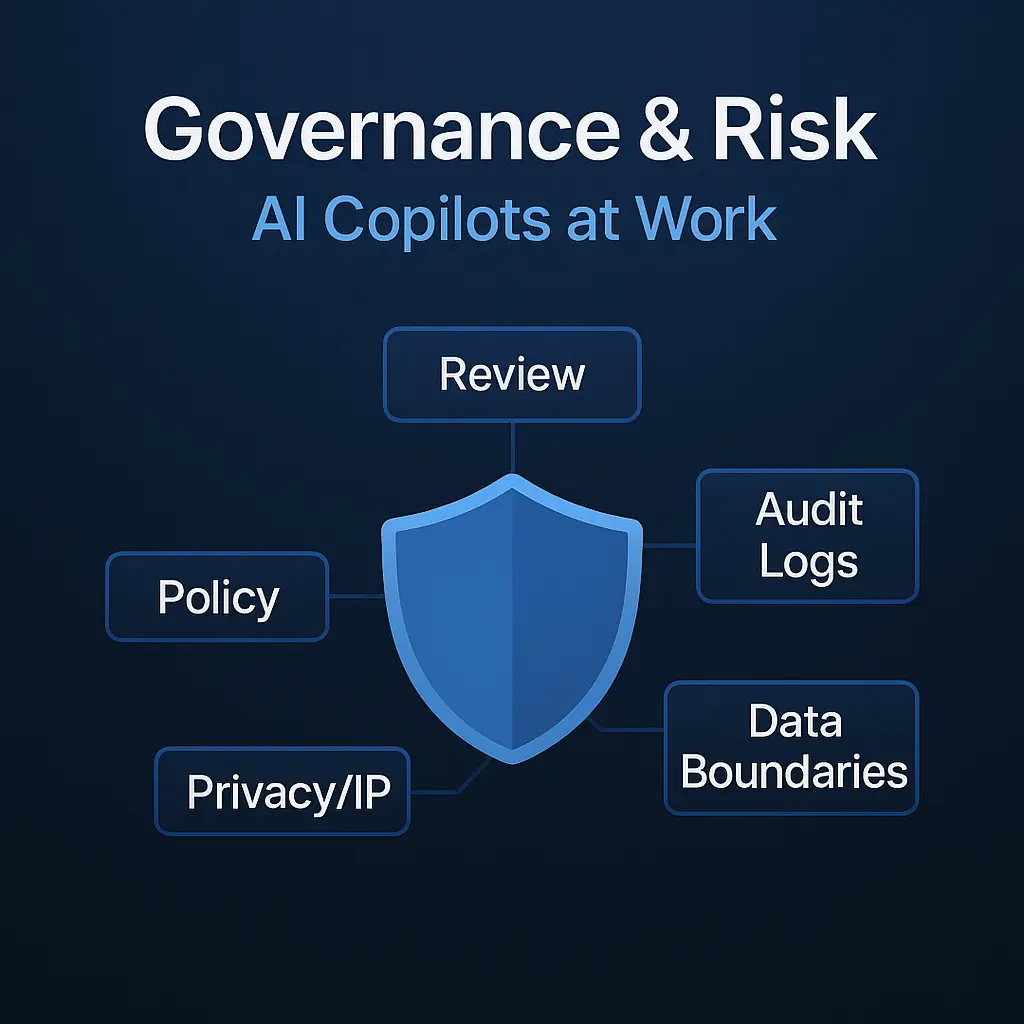

Governance & Compliance for AI Copilots at Work

Governance should be lightweight but explicit. Map controls to recognized frameworks and keep them human-centered—this is how you scale AI Copilots at Work safely without slowing delivery.

- Policy: approved tools, use-cases, and data classes; what never goes into prompts.

- Review: mandatory human review for external-facing content or code merges.

- Audit logs: who prompted what, when, and with which sources (for learning and compliance).

- Privacy/IP: data retention controls; vendor commitments; export/delete pathways.

- Data boundaries: mask/redact sensitive fields; disable training on your data if required.

Anchor governance in the NIST AI Risk Management Framework and the OECD AI Principles.
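Two of these controls, masking sensitive fields and keeping an audit trail, can be sketched together: redact the prompt, then record who sent it, when, and with which sources. This is a minimal Python illustration; the regex patterns are assumptions that catch only simple email addresses and long digit runs (phone or account numbers), not a complete PII detector, and the JSON log format is hypothetical.

```python
import json
import re
from datetime import datetime, timezone

# Illustrative patterns only; real redaction needs a vetted, policy-specific rule set.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    # 9+ digits, optionally separated by single spaces or hyphens (phones, account numbers).
    "NUMBER": re.compile(r"\b\d(?:[\s-]?\d){8,}\b"),
}


def redact(text: str) -> str:
    """Replace each match with a placeholder such as [EMAIL]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def audit_record(user: str, prompt: str, sources: list[str]) -> str:
    """Build one JSON log line: who prompted what, when, with which sources."""
    entry = {
        "user": user,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "prompt": redact(prompt),  # store the redacted text, never the raw prompt
        "sources": sources,
    }
    return json.dumps(entry)


if __name__ == "__main__":
    raw = "Email jane.doe@example.com or call 555-123-4567 by 2025-08-26."
    print(redact(raw))
    print(audit_record("ana", raw, ["/docs/meeting-notes/2025-08-26.md"]))
```

Logging the redacted prompt keeps the audit trail itself from becoming a second copy of the sensitive data.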

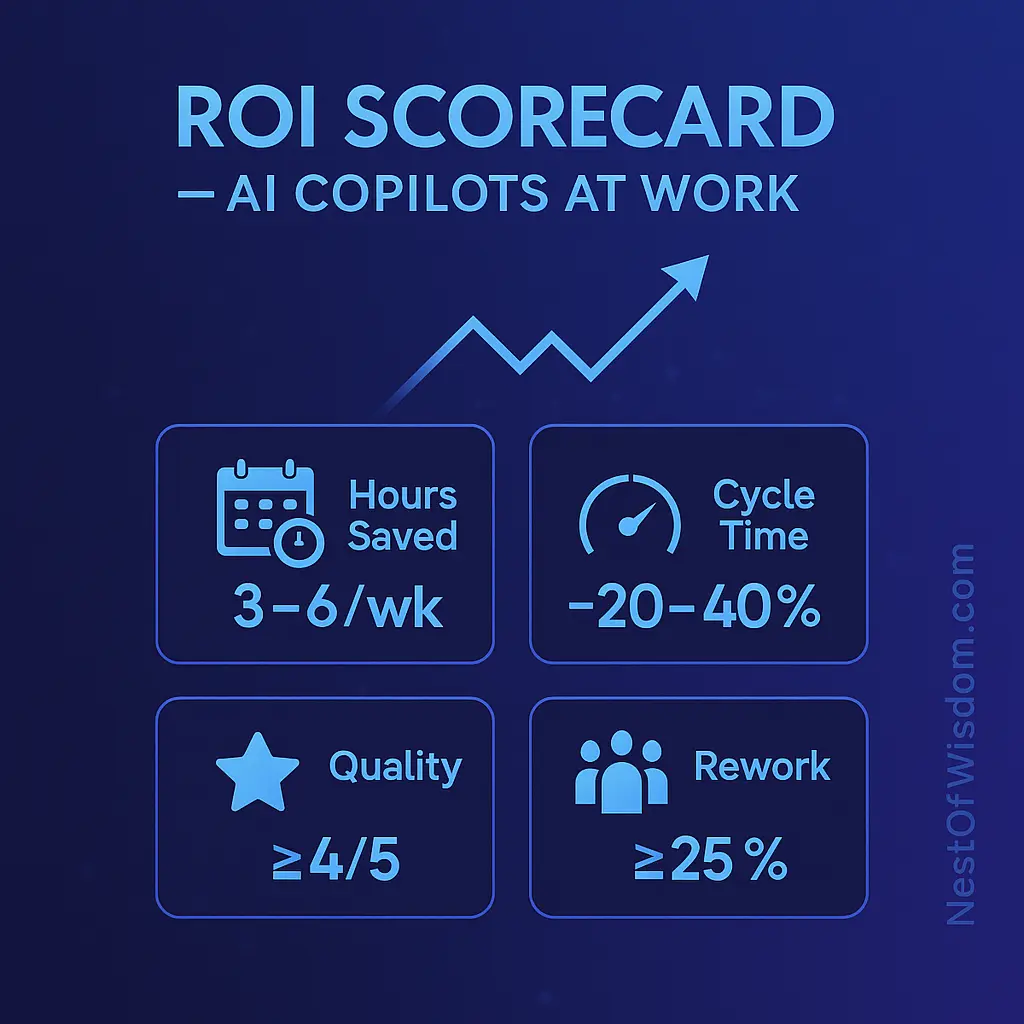

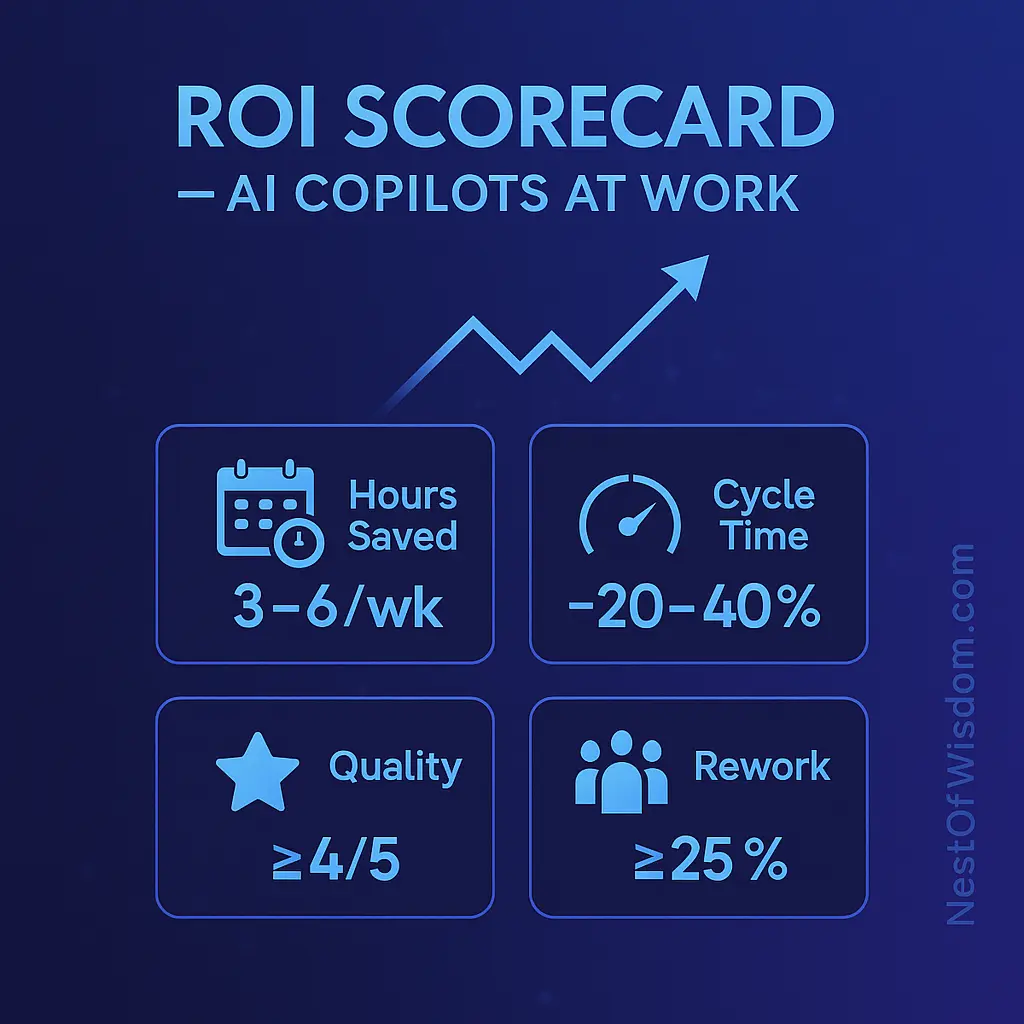

Evaluation & ROI for AI Copilots at Work

Leaders need proof that these tools are more than a novelty. Combine a scoreboard with short experiments so improvements compound month over month. A simple, shared dashboard keeps the story visible.

| Metric | Target | How to Measure |
|---|---|---|
| Hours saved per user/week | 3–6 | Self-report + time tracking vs baseline |
| Cycle time reduction | 20–40% | Task timestamps (issue tracker/PRs) |
| Quality score | ≥ 4/5 | Reviewer checklist rating |
| Rework rate | −25% | Number of revisions per artifact |
| Adoption | ≥ 70% | Weekly active users / eligible users |
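The scoreboard can be checked mechanically each month. The Python sketch below encodes the targets from the table above, using the lower bound of each range as the pass mark and reporting which metrics are on track. Treating the rework target as a relative change versus baseline is an assumption about how you record it, and the sample measurements are placeholders, not results.

```python
from typing import Callable

# Pass marks from the scoreboard table; ranges use their lower bound.
TARGETS: dict[str, Callable[[float], bool]] = {
    "hours_saved_per_user_week": lambda v: v >= 3,
    "cycle_time_reduction": lambda v: v >= 0.20,
    "quality_score": lambda v: v >= 4.0,
    "rework_change": lambda v: v <= -0.25,  # assumed: relative change vs baseline
    "adoption": lambda v: v >= 0.70,
}


def relative_change(before: float, after: float) -> float:
    """(after - before) / before; negative means the metric went down."""
    return (after - before) / before


def adoption_rate(weekly_active: int, eligible: int) -> float:
    """Weekly active users / eligible users; 0.0 if nobody is eligible yet."""
    return weekly_active / eligible if eligible else 0.0


def scoreboard(measured: dict[str, float]) -> dict[str, bool]:
    """Pass/fail per metric; metrics not yet measured are skipped."""
    return {name: check(measured[name]) for name, check in TARGETS.items() if name in measured}


if __name__ == "__main__":
    # Placeholder measurements, not real results.
    measured = {
        "hours_saved_per_user_week": 4.5,
        "cycle_time_reduction": -relative_change(10.0, 7.5),  # days per task
        "quality_score": 4.2,
        "rework_change": relative_change(2.0, 1.6),  # revisions per artifact
        "adoption": adoption_rate(weekly_active=6, eligible=10),
    }
    for name, ok in scoreboard(measured).items():
        print(f"{name}: {'on track' if ok else 'below target'}")
```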

Prompt A/B Rubric (10-point scale)

- Faithfulness (0–2): grounded in cited sources?

- Clarity (0–2): scannable structure for the target audience?

- Actionability (0–2): unambiguous next steps?

- Tone fit (0–2): matches brand voice?

- Effort saved (0–2): minutes shaved vs baseline?
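Because the rubric is a sum of five 0–2 scores, comparing two prompt variants comes down to averaging reviewer totals. The following is a minimal Python sketch, assuming every reviewer scores all five criteria and the variant with the higher mean total wins; ties and small samples still deserve a human call.

```python
from statistics import mean

CRITERIA = ("faithfulness", "clarity", "actionability", "tone_fit", "effort_saved")


def rubric_total(scores: dict[str, int]) -> int:
    """Sum the five criteria after checking each is present and within 0-2."""
    missing = [c for c in CRITERIA if c not in scores]
    if missing:
        raise ValueError(f"missing criteria: {missing}")
    for c in CRITERIA:
        if not 0 <= scores[c] <= 2:
            raise ValueError(f"{c} must be 0-2, got {scores[c]}")
    return sum(scores[c] for c in CRITERIA)  # total on the 0-10 scale


def compare_variants(a: list[dict[str, int]], b: list[dict[str, int]]) -> str:
    """Return 'A', 'B', or 'tie' by mean rubric total across reviewers."""
    mean_a = mean(rubric_total(s) for s in a)
    mean_b = mean(rubric_total(s) for s in b)
    if mean_a == mean_b:
        return "tie"
    return "A" if mean_a > mean_b else "B"


if __name__ == "__main__":
    reviewer_1 = {"faithfulness": 2, "clarity": 2, "actionability": 1, "tone_fit": 2, "effort_saved": 1}
    reviewer_2 = {"faithfulness": 1, "clarity": 2, "actionability": 1, "tone_fit": 1, "effort_saved": 1}
    print(compare_variants([reviewer_1], [reviewer_2]))
```

Validating every score up front keeps a typo (a 3, or a skipped criterion) from silently skewing the comparison.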

Adoption & Change Management

- Enablement beats policy: share 5–10 “golden prompts” per role and a one-page checklist. Policy without examples drives shadow AI, which undermines AI Copilots at Work.

- Office hours: weekly 30-minute session to refine prompts, share wins, and retire duds—keeps AI Copilots at Work sharp.

- Ethics & bias: ask the assistant to “explain reasoning” and “challenge assumptions.” Rotate reviewers to avoid blind spots.

- Celebrate public wins: short before/after notes keep momentum high and normalize usage.

FAQs

1) Do AI Copilots at Work replace employees?

No—these tools augment people. They compress routine work so humans focus on decisions, strategy, and relationships.

2) Which roles benefit most?

Writers, analysts, project managers, developers, sales, and support—anywhere repetitive drafting, summarizing, or analysis exists.

3) What data should never go into prompts?

Credentials, secrets, customer PII, protected health data, legal drafts not approved for external systems, and anything your policy forbids. Prefer enterprise plans with admin controls and logs.

4) How do we avoid “AI-written fluff”?

Use structured prompts (purpose, audience, tone, data sources), require reviewer checklists, and ask for citations to sections used.

5) How soon will we see results?

Teams that start with three scenarios, adopt shared prompts, and measure time saved and rework often see quick wins within 2–4 weeks.

Conclusion & Next Steps

AI Copilots at Work deliver authentic ROI when introduced with intention: pick a few high-value scenarios, standardize prompts and reviews, measure outcomes, and scale only what works. Pair governance with enablement—give people examples, not just rules. For broader context, explore Generative AI ROI, Cloud Benefits, Green Tech in the Cloud, and Cybersecurity in the Age of AI. Small teams can do more with less—safely, measurably, and human-first with AI Copilots at Work.

References

- GitHub × Accenture — Enterprise impact of Copilot

- Academic study — Developers using Copilot completed tasks faster (controlled experiment)

- GitHub survey — Adoption and developer sentiment

- NIST — AI Risk Management Framework

- OECD — AI Principles

Nest of Wisdom Insights is a dedicated editorial team focused on sharing timeless wisdom, natural healing remedies, spiritual practices, and practical life strategies. Our mission is to empower readers with trustworthy, well-researched guidance rooted in both Tamil culture and modern science.

Our aim is for knowledge about natural living and spirituality to benefit everyone.

- Nest of Wisdom Author: https://nestofwisdom.com/author/varakulangmail-com/

