AGI vs ASI shows up in board decks, sprint reviews, and industry chatter, yet many teams still lack a practical, business-ready explanation. What exactly do these terms mean? How close are we? And which risks and opportunities should leaders act on today? This executive guide explains AGI vs ASI in plain language, then translates the distinction into governance, controls, benchmarks, scenarios, and a 90-day roadmap any organization can implement without hype. In short, AGI vs ASI is the lens leaders can use to separate science from speculation.

Put simply: “AGI” means general capability on par with humans; “ASI” means superintelligence that surpasses humans. Only by understanding both can we make the right decisions for our business.

Table of Contents

- 1) What AGI and ASI Actually Mean

- 2) Why AGI vs ASI Matters for Business

- 3) AGI vs ASI: Side-by-Side Comparison

- 4) Where We Are Today: Reality vs Hype

- 5) Measuring Progress: AGI vs ASI Benchmarks, Evals & Signals

- 6) Business Impact: What Changes First

- 7) Talent, Org Design & New Roles

- 8) Vendor Selection & Procurement Guardrails

- 9) Governance, Risk & Controls That Actually Work

- 10) Regulation Watch: EU AI Act & Global Trends

- 11) Timelines & Scenarios Leaders Should Plan For

- 12) Incident Response & Red-Team Playbooks

- 13) 90-Day Executive Roadmap

- AGI vs ASI – FAQs

- Conclusion: AGI vs ASI in Perspective

- Further Reading & References

1) What AGI and ASI Actually Mean

Artificial General Intelligence (AGI) is the idea of a system with human-level generality. It learns new domains, transfers knowledge across tasks, plans, reasons, and adapts without being retrained from scratch. Ask a human to switch from drafting a policy to debugging code and then to designing a survey—they can attempt all three; AGI aspires to that flexible competence.

Artificial Superintelligence (ASI) goes beyond human capability—consistently outperforming the best experts across science, strategy, and creativity. ASI is still theoretical, but it matters for risk and governance conversations because it implies capabilities that could outpace current oversight models. When leaders debate AGI vs ASI, they’re comparing human-level general intelligence vs far-beyond-human intelligence.

Summary: AGI = human-level general capability; ASI = intelligence that exceeds humans. Seeing the two as distinct is what helps you set the right policies.

2) Why AGI vs ASI Matters for Business

You don’t need confirmed AGI or ASI to realize value. Framing the landscape as AGI vs ASI helps leaders separate the real from the speculative. Today’s ROI comes from disciplined, safe use of advanced systems in copilots, analytics, and automation. Long-term governance—transparency, oversight, and risk management—keeps you future-ready if capabilities accelerate.

For hands-on value, see near-term guides like AI Copilots at Work and Best AI Productivity Tools (2025). These are wins you can ship this quarter—no AGI required.

3) AGI vs ASI: Side-by-Side Comparison

| Aspect | AGI | ASI |
|---|---|---|
| Core capability | Human-level, general-purpose learning and adaptation | Beyond human in knowledge, reasoning, and creativity |
| Status today | Concept under active research; not confirmed | Theoretical / speculative |
| Primary opportunities | Broad copilots, autonomous assistance, resilient automation | Potentially transformative discoveries and strategies |
| Key risks | Bias, hallucinations, misuse, data leakage, safety | Systemic control, alignment, societal-level risks |
| Governance demand | Strong but feasible with current frameworks | Would require stronger international coordination |

4) Where We Are Today: Reality vs Hype

Modern multimodal models appear “general” because they perform well across many tasks. Pragmatically, organizations should treat them as advanced artificial narrow intelligence (ANI): powerful pattern learners with limits in reliability and grounded reasoning. This mindset avoids overpromising and keeps teams focused on verifiable outcomes.

What’s working now? The Generative AI ROI analysis shows measurable gains in productivity, support resolution times, and developer efficiency using today’s systems—no AGI required. Likewise, foundations covered in Cloud Computing Benefits for Businesses enable secure scaling of AI workloads and data governance.

Note: “What is available today already delivers real value; don’t let inflated expectations delay your projects.”

5) Measuring Progress: AGI vs ASI Benchmarks, Evals & Signals

Leaders often ask, “How will we know when AGI, or ASI, is close?” There’s no single switch. Instead, look for a basket of signals:

- Generalization across novel tasks: zero-shot performance on tasks outside training distributions, with robust reasoning traces.

- Tool-using competence: autonomous orchestration of APIs, code, and retrieval to achieve multi-step goals with auditability.

- Long-horizon planning: consistent success on tasks requiring multi-day or multi-week planning with minimal human nudging.

- Self-critique & improvement: models that reliably diagnose their own failure modes and repair prompts/approaches without being steered.

In practice, your organization doesn’t need a global benchmark to move. Build an evaluation sheet aligned to your work: task accuracy, response latency, safety flags (toxicity/leakage), and business KPIs—then monitor trend lines weekly.
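A minimal sketch of such an evaluation sheet, assuming each evaluated interaction is logged as one record with a week label, a pass/fail check, latency, a safety flag, and one business KPI. The field names and thresholds are hypothetical placeholders; swap in whatever your team actually measures.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class EvalRecord:
    week: str           # ISO week label such as "2025-W10" (hypothetical convention)
    correct: bool       # passed the task-level accuracy check
    latency_ms: float   # end-to-end response latency
    safety_flag: bool   # toxicity or leakage detected by your filters
    kpi_value: float    # business KPI for this item, e.g. minutes saved


def weekly_summary(records: list[EvalRecord]) -> dict[str, dict[str, float]]:
    """Group records by week and compute one row of trend-line metrics per week."""
    by_week: dict[str, list[EvalRecord]] = {}
    for r in records:
        by_week.setdefault(r.week, []).append(r)
    summary = {}
    for week in sorted(by_week):  # zero-padded ISO week labels sort chronologically
        rows = by_week[week]
        summary[week] = {
            "accuracy": sum(r.correct for r in rows) / len(rows),
            "avg_latency_ms": mean(r.latency_ms for r in rows),
            "safety_flag_rate": sum(r.safety_flag for r in rows) / len(rows),
            "avg_kpi": mean(r.kpi_value for r in rows),
        }
    return summary


def weeks_needing_review(summary, min_accuracy=0.9, max_flag_rate=0.01):
    """List weeks that breach the (hypothetical) accuracy or safety thresholds."""
    return [
        week for week, m in summary.items()
        if m["accuracy"] < min_accuracy or m["safety_flag_rate"] > max_flag_rate
    ]
```

Feeding the weekly summary into a spreadsheet or dashboard gives you the trend lines; `weeks_needing_review` is one simple way to turn them into a review trigger.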

6) Business Impact: What Changes First

6.1 Knowledge Work Acceleration

Copilots draft content, summarize long threads, and suggest code or tests. The fastest wins come from embedding copilots into daily apps—email, docs, IDEs, CRM—paired with clear rules. For adoption playbooks and tool picks, see AI Copilots at Work and AI Productivity Tools.

6.2 Decision Intelligence & Analytics

Retrieval-augmented analytics and natural-language BI let teams query data without writing complex SQL. Grounding model outputs in your own data reduces hallucinations and makes answers easier to validate. Here, the AGI vs ASI debate matters far less than consistent upstream data hygiene and access control.
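To make grounding concrete, here is a deliberately crude sketch: it ranks your documents by word overlap with the question (a stand-in for a real vector index) and flags answer sentences whose words mostly do not appear in the retrieved sources. The lexical approach and the 0.6 coverage threshold are assumptions for illustration; production systems typically rely on embeddings and citation checks instead.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercased alphanumeric word tokens; a deliberately crude normalizer."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: dict[str, str], k: int = 2) -> list[str]:
    """Return the IDs of the k documents sharing the most words with the query."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda doc_id: len(q & tokenize(docs[doc_id])), reverse=True)
    return ranked[:k]


def unsupported_sentences(answer: str, sources: list[str], min_coverage: float = 0.6) -> list[str]:
    """Flag answer sentences whose words mostly do not appear in the retrieved sources."""
    source_words = set().union(*(tokenize(s) for s in sources))
    flagged = []
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        words = tokenize(sentence)
        if words and len(words & source_words) / len(words) < min_coverage:
            flagged.append(sentence)
    return flagged
```

Flagged sentences are candidates for review, not proof of a hallucination: a correct paraphrase that uses different wording will also be flagged.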

6.3 Customer Experience

AI boosts CX with intent classification, triage, and proactive help. A “right to a human” escalation path keeps trust high. Track CSAT, handle time, and first-contact resolution so leaders can expand what truly works.
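A minimal sketch of both ideas, assuming an upstream intent classifier that returns a label and a confidence score. The escalation phrases, the 0.75 threshold, and the CSAT convention used here (share of 4 or 5 ratings on a 1 to 5 scale) are illustrative assumptions, not a standard.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical phrases that signal a customer wants a person; tune to your channels.
HUMAN_REQUEST_PHRASES = ("human", "agent", "real person", "representative")


def route(message: str, intent: str, confidence: float, threshold: float = 0.75) -> str:
    """Return "human" or "bot:<intent>"; an explicit request for a person always wins."""
    text = message.lower()
    if any(phrase in text for phrase in HUMAN_REQUEST_PHRASES):
        return "human"  # honor the "right to a human"
    if confidence < threshold:
        return "human"  # low classifier confidence: escalate rather than guess
    return f"bot:{intent}"


@dataclass
class Ticket:
    csat: Optional[int]   # 1-5 survey score, None if the customer did not answer
    handle_minutes: float
    contacts: int         # contacts needed to resolve the issue


def cx_metrics(tickets: list[Ticket]) -> dict:
    """CSAT %, average handle time, and first-contact resolution % for a non-empty batch."""
    rated = [t.csat for t in tickets if t.csat is not None]
    return {
        "csat_pct": 100 * sum(1 for s in rated if s >= 4) / len(rated) if rated else None,
        "avg_handle_minutes": sum(t.handle_minutes for t in tickets) / len(tickets),
        "fcr_pct": 100 * sum(1 for t in tickets if t.contacts == 1) / len(tickets),
    }
```

Substring matching is naive (it would also fire on “travel agent”), so a real deployment could treat “talk to a person” as its own intent instead.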

6.4 Secure Automation & Ops

Automation pipelines route routine work and free experts for judgment calls. But security must grow in parallel—see Cybersecurity in the Age of AI for practical defenses (input validation, access control, red-teaming) that apply immediately, regardless of AGI vs ASI timelines.

6.5 Cloud & Sustainability Foundations

A well-architected cloud setup helps manage model hosting, vector indexes, and governance. Sustainability matters too: energy-aware architectures like those discussed in Green Tech in the Cloud align cost, performance, and ESG goals.

7) Talent, Org Design & New Roles

AI doesn’t replace teams; it reshapes them. Framing org changes through AGI vs ASI helps scope responsibilities without hype. Expect these roles to emerge:

- AI Product Owner: translates business outcomes to prompts, policies, and metrics.

- AI QA / Red Team: adversarial testers who probe for failure modes, bias, and leakage.

- Prompt Librarian: curates “golden examples,” versions changes, and manages reuse.

- Data Steward: owns lineage, retention, and minimization practices.

- Governance Lead: coordinates policy, audits, model factsheets, and transparency reports.

Practical tip: start small. Embed one AI product owner per pilot domain, share a weekly “wins & duds” note, and rotate reviewers to avoid blind spots.

Quick tip: “Even in a small team, work on a ‘one accountable owner’ basis; the benefits show up quickly.”

8) Vendor Selection & Procurement Guardrails

Before you sign, require vendors to answer a short, consistent AI Due-Diligence Checklist:

- Data handling: retention policy, training on customer data (on/off), encryption at rest/in transit.

- Access controls: SSO/MFA, role-based permissions, audit log export.

- Model transparency: base models used, update cadence, change-log communication.

- Evaluation evidence: task-level accuracy, safety filters, abuse safeguards, red-team results.

- Compliance posture: alignment with NIST AI RMF, data residency options, incident SLAs.

Standardize this as a 2-page appendix to your MSAs so procurement remains fast but safe.
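If vendor answers arrive as structured data (for example, a form export), a short script can flag gaps before legal review. This sketch assumes one free-text answer per checklist item; the item keys are hypothetical names that mirror the checklist above.

```python
# Hypothetical item keys mirroring the AI Due-Diligence Checklist above.
CHECKLIST = {
    "data_handling": ["retention_policy", "trains_on_customer_data",
                      "encryption_at_rest", "encryption_in_transit"],
    "access_controls": ["sso_mfa", "role_based_permissions", "audit_log_export"],
    "model_transparency": ["base_models", "update_cadence", "change_log_communication"],
    "evaluation_evidence": ["task_accuracy", "safety_filters",
                            "abuse_safeguards", "red_team_results"],
    "compliance_posture": ["nist_ai_rmf_alignment", "data_residency", "incident_sla"],
}


def missing_answers(responses: dict[str, str]) -> dict[str, list[str]]:
    """Return checklist items left blank or unanswered, grouped by area."""
    gaps: dict[str, list[str]] = {}
    for area, items in CHECKLIST.items():
        blank = [item for item in items if not responses.get(item, "").strip()]
        if blank:
            gaps[area] = blank
    return gaps
```

An empty result means every item has an answer, not that the answers are acceptable; a reviewer still has to read them.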

9) Governance, Risk & Controls That Actually Work

Good governance turns AGI vs ASI from an abstract debate into practice. A minimal, effective stack includes:

- Purpose & Limits: document use case, users, acceptable error rates, and red-lines. Be explicit about where AI is assistive vs authoritative.

- Human Oversight: require review for sensitive actions (customer communications, finance, HR). Publish escalation paths.

- Data Governance: classify data, minimize exposure, apply masking/retention, maintain an audit trail. Build on cloud basics.

- Security: harden prompts/contexts, detect prompt injection, sandbox tool use, monitor for exfiltration. Pair with AI cybersecurity guidance.

- Evaluation: track task metrics (accuracy/latency), safety metrics (toxicity/leakage), and business KPIs together.

- Lifecycle & Versioning: version models and prompts, document changes, monitor drift. Treat prompts like code—reviewed & tested.

- Transparency: provide notices where appropriate, label AI-assisted outputs, and maintain a model factsheet (capabilities, constraints, evaluation results).
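Several of these controls can be enforced in one place: a gate that every model-initiated tool call passes through. The sketch below assumes a hypothetical `ToolCall` record and combines three checks from the list: an allowlist (sandboxed tool use), a pattern check for obvious prompt-injection phrases, and a human-review requirement for the sensitive categories named under Human Oversight. Regex matching catches only the crudest injections, so treat it as one layer, not a complete defense.

```python
import re
from dataclasses import dataclass, field

# Sensitive categories taken from the Human Oversight item above.
SENSITIVE_CATEGORIES = {"customer_communication", "finance", "hr"}

# Crude, illustrative patterns; real injection attempts are far more varied.
INJECTION_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"reveal (the |your )?system prompt", re.IGNORECASE),
]


@dataclass
class ToolCall:
    tool: str          # name of the tool the model wants to invoke
    category: str      # business category of the action
    context: str = ""  # user input or retrieved text that led to the call


@dataclass
class Policy:
    allowed_tools: set[str] = field(default_factory=set)


def decide(call: ToolCall, policy: Policy) -> str:
    """Return "block", "needs_human_review", or "allow" for a proposed tool call."""
    if call.tool not in policy.allowed_tools:
        return "block"  # sandboxing: tools not on the allowlist never run
    if any(p.search(call.context) for p in INJECTION_PATTERNS):
        return "block"  # suspected prompt injection in the triggering context
    if call.category in SENSITIVE_CATEGORIES:
        return "needs_human_review"  # human oversight for sensitive actions
    return "allow"
```

Logging every decision, including “allow”, also gives you the audit trail the Data Governance item calls for.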

Align with the NIST AI Risk Management Framework and keep a living governance document that product, data, and security teams maintain together.
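The Lifecycle & Versioning item in the list above says to treat prompts like code. One minimal way to do that, sketched here under the assumption that prompts are plain `str.format` templates, is to derive each version ID from a hash of the template text and run simple golden checks in CI before a new version ships. The function names and test format are hypothetical.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptVersion:
    name: str
    template: str
    version: str  # content hash: any edit to the template yields a new version


def register(name: str, template: str) -> PromptVersion:
    """Create an immutable prompt version whose ID is derived from its text."""
    digest = hashlib.sha256(template.encode("utf-8")).hexdigest()[:12]
    return PromptVersion(name, template, digest)


def render(pv: PromptVersion, **fields: str) -> str:
    return pv.template.format(**fields)


def run_prompt_tests(pv: PromptVersion, cases: list[tuple[dict, str]]) -> list[tuple[dict, str]]:
    """Each case is (fields, required_text). Returns failing cases; empty means pass."""
    failures = []
    for fields, required in cases:
        try:
            text = render(pv, **fields)
        except KeyError as missing:
            failures.append((fields, f"missing field {missing}"))
            continue
        if required not in text:
            failures.append((fields, required))
    return failures
```

Logging `pv.version` alongside every model call lets you tie an incident or a metric shift back to the exact prompt text that produced it.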

Reminder: “Documenting everything and keeping it up to date is the foundation of safety.”

10) Regulation Watch: EU AI Act & Global Trends

The EU AI Act phases in obligations over several years: the earliest rules cover prohibited practices, followed by staged requirements for general-purpose AI (GPAI) models and transparency duties. Even if you don’t operate in the EU, the Act, together with the broader AGI vs ASI debate, is shaping best practices around documentation, transparency reports, and evaluations. Build your documentation muscle now (model facts, data lineage, evaluation results) so compliance becomes an export of good engineering rather than a scramble later. See the Official EU AI Act Portal.

11) Timelines & Scenarios Leaders Should Plan For

Strategy thrives on scenario thinking. Use a tiered view and refresh quarterly to stay ready for AGI vs ASI timelines:

- Near Horizon (0–12 months): copilots expand; retrieval and workflow tools mature; governance templates normalize. Action: double down on enablement, metrics, and safe automation.

- Middle Horizon (1–3 years): stronger tool orchestration, longer context, steadier reasoning; more standardized transparency artifacts. Action: scale proven pilots; upgrade observability; invest in data quality.

- Speculative Horizon (3–7+ years): if AGI-like capabilities emerge, expect tighter oversight, international coordination, and new assurance standards. Action: keep “break-glass” policies and board education ready.

In short: “When time horizons are clear, there are fewer sudden shocks.”

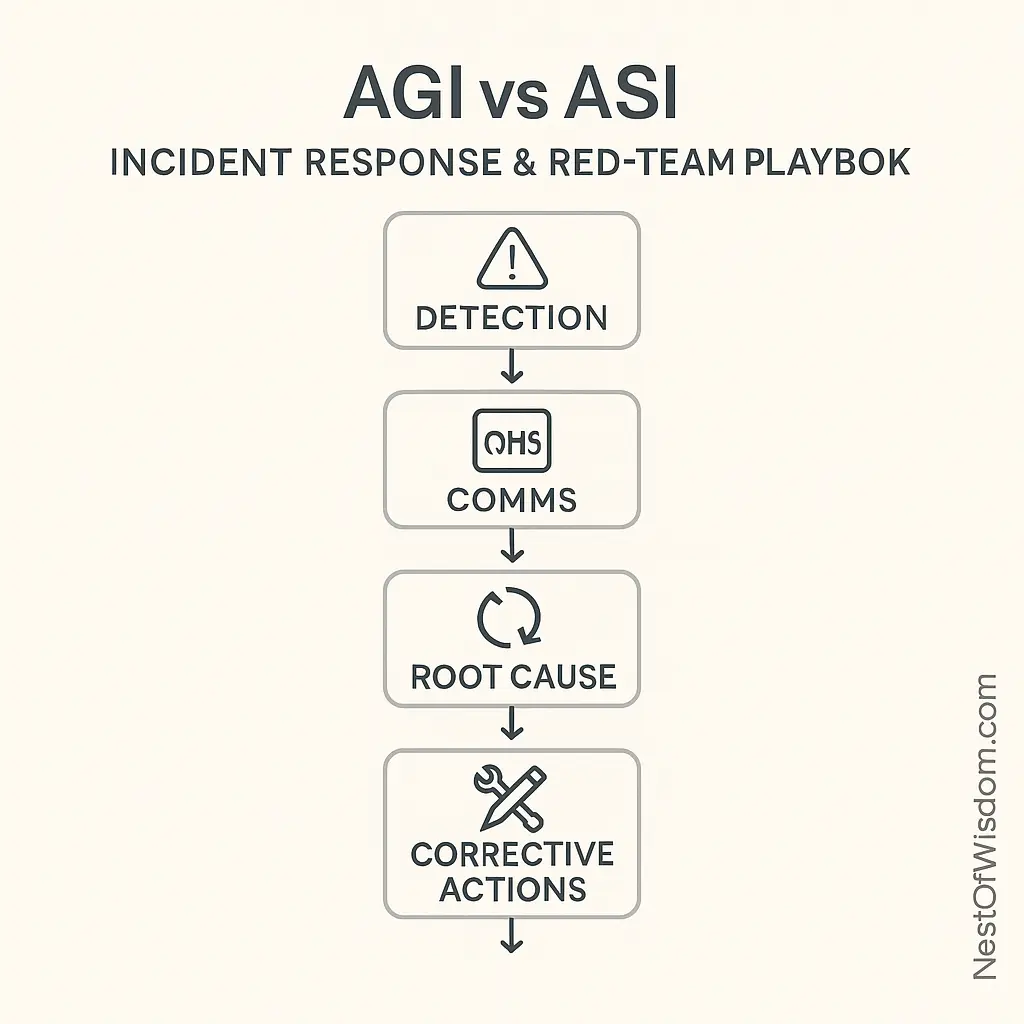

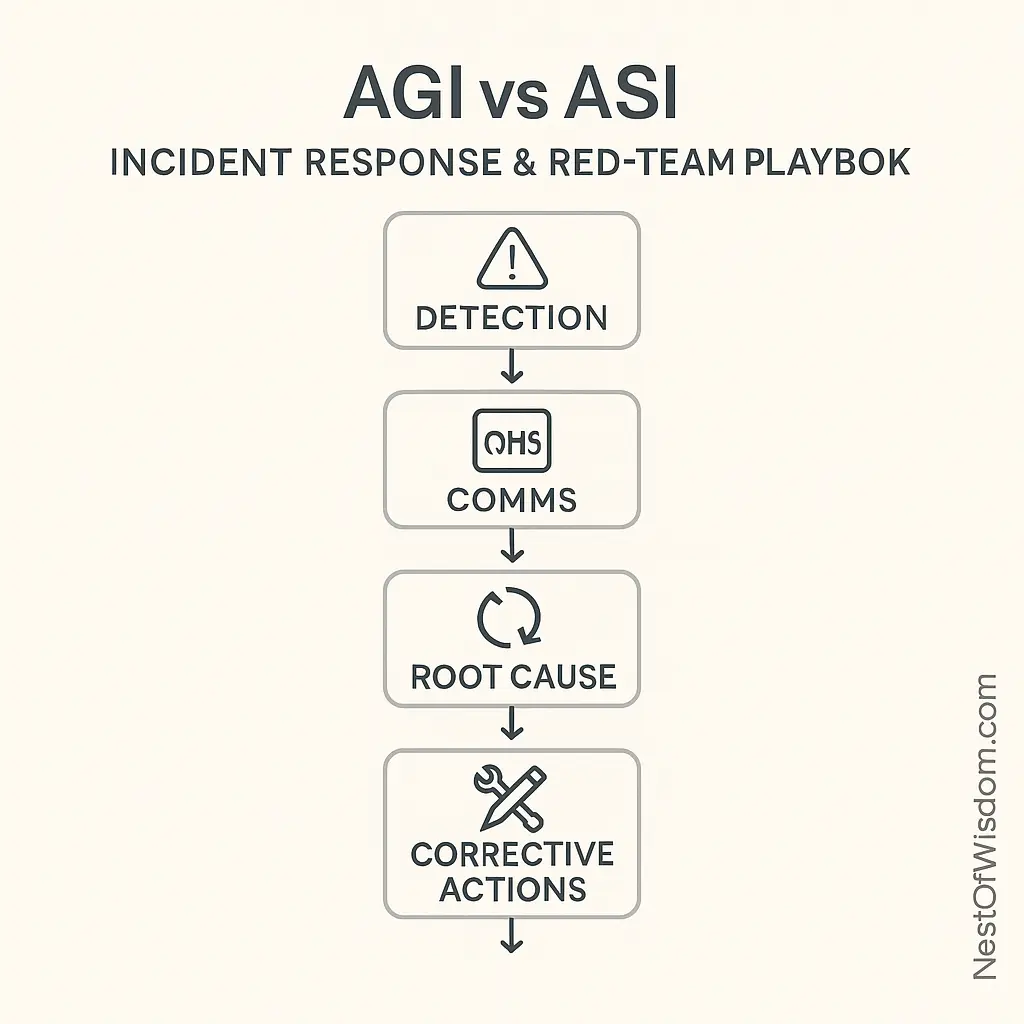

12) Incident Response & Red-Team Playbooks

AI incidents rarely look like classic outages. Prepare for:

- Content integrity: undisclosed AI-generated outputs or hallucinated claims reaching customers.

- Data exposure: prompts/contexts leaking sensitive data to logs or third-party tools.

- Tool misuse: over-permissioned actions (e.g., mass emails, repo writes) triggered by flawed instructions.

Playbook skeleton: detection → containment (disable tools, rotate keys) → stakeholder comms → evidence capture → root-cause (prompt, retrieval, policy) → corrective actions (gates, filters, training) → postmortem with checklists updated.
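One way to keep responders on the playbook, sketched here with a hypothetical `Incident` class and stage names taken from the skeleton above, is to record each stage with a timestamped note and refuse to skip ahead. The strict ordering is a design assumption; you may prefer to let evidence capture run in parallel with containment.

```python
from datetime import datetime, timezone
from typing import Optional

# Stage order follows the playbook skeleton above.
STAGES = ["detection", "containment", "stakeholder_comms", "evidence_capture",
          "root_cause", "corrective_actions", "postmortem"]


class Incident:
    def __init__(self, kind: str):
        self.kind = kind  # e.g. "content_integrity", "data_exposure", "tool_misuse"
        self.completed: list[str] = []
        self.log: list[tuple[str, str, str]] = []  # (UTC timestamp, stage, note)

    def next_stage(self) -> Optional[str]:
        """The stage responders must complete next, or None when the playbook is done."""
        done = len(self.completed)
        return STAGES[done] if done < len(STAGES) else None

    def complete(self, stage: str, note: str) -> None:
        """Record a finished stage; refuse to skip ahead so the postmortem has a full trail."""
        expected = self.next_stage()
        if stage != expected:
            raise ValueError(f"expected stage {expected!r}, got {stage!r}")
        self.completed.append(stage)
        self.log.append((datetime.now(timezone.utc).isoformat(), stage, note))
```

The resulting `log` doubles as the evidence timeline for the postmortem.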

13) 90-Day Executive Roadmap

Days 0–30: Baseline & Guardrails

- Pick 2–3 low-risk, high-leverage pilots (e.g., support summarization, internal search, code assist).

- Publish a 2-page AI policy: data do’s/don’ts, human-review triggers, vendor rules, and rate limits.

- Stand up logging and an evaluation sheet. Define KPIs before pilots begin.

Days 31–60: Pilot, Measure, Harden

- Ship to a small cohort; collect structured feedback weekly.

- Harden prompts and retrieval; add safety filters and escalation paths.

- Review cost/usage; set budgets and throttles.
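Throttles and budgets are easy to prototype. The sketch below uses a classic token-bucket rate limiter plus a hard spend cap; the rate, capacity, and per-1,000-token price are placeholders, not any vendor’s actual pricing. The injectable `clock` parameter exists only to make the limiter testable.

```python
import time


class TokenBucket:
    """Token-bucket limiter: refills at `rate` requests/second, bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class Budget:
    """Hard spend cap per team or pilot; rejects a request rather than overspending."""

    def __init__(self, limit_usd: float):
        self.limit_usd = limit_usd
        self.spent_usd = 0.0

    def charge(self, tokens_used: int, usd_per_1k_tokens: float) -> bool:
        cost = tokens_used / 1000 * usd_per_1k_tokens
        if self.spent_usd + cost > self.limit_usd:
            return False
        self.spent_usd += cost
        return True
```

Checking the bucket before each model call and the budget after it keeps both bursts and monthly spend inside the limits you set.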

Days 61–90: Prove & Scale

- Publish outcomes vs control, cost vs baseline, and risk findings.

- Productionize what worked; sunset what didn’t. Schedule quarterly governance reviews.

- Cross-link enablement resources (AI Copilots at Work and AI Productivity Tools) so teams can self-serve.
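For the day-90 readout, a small scorecard keeps “outcomes vs control” and “cost vs baseline” comparable across pilots. This sketch assumes each group reports resolved items, total cost, and average handle time; the field names are hypothetical, and a real readout should also check that the difference is not just noise (for example, with a significance test).

```python
def relative_change_pct(pilot: float, control: float) -> float:
    """Percent change of the pilot metric relative to the control group."""
    if control == 0:
        raise ValueError("control metric must be non-zero")
    return 100 * (pilot - control) / control


def cost_per_outcome(total_cost: float, outcomes: int) -> float:
    """Cost per resolved item; infinite when nothing was resolved."""
    return total_cost / outcomes if outcomes else float("inf")


def scorecard(pilot: dict, control: dict) -> dict:
    """pilot/control need 'resolved', 'cost_usd', and 'avg_handle_minutes' (illustrative keys)."""
    return {
        "handle_time_change_pct": relative_change_pct(
            pilot["avg_handle_minutes"], control["avg_handle_minutes"]),
        "cost_per_resolution_pilot": cost_per_outcome(pilot["cost_usd"], pilot["resolved"]),
        "cost_per_resolution_control": cost_per_outcome(control["cost_usd"], control["resolved"]),
    }
```

A negative `handle_time_change_pct` means the pilot group resolved items faster than the control group.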

Takeaway: “Secure the foundation in 90 days, then measure in the next quarter; that is the formula for success.”

AGI vs ASI – FAQs

1) Does AGI exist today?

No. There is no widely accepted benchmark or authority confirming AGI. Treat current systems as advanced ANI with impressive breadth but known limitations.

2) What’s the big difference in AGI vs ASI?

AGI targets human-level general intelligence; ASI implies capabilities beyond the best humans across virtually all cognitive tasks.

3) Will AGI replace jobs?

Expect task-level substitution and role redesign before wholesale replacement. New roles—governance, AI QA, orchestration—offset some displacement.

4) How should we prepare for ASI if it’s hypothetical?

By building governance today: human oversight, data controls, evaluation, transparency, and incident playbooks. These muscles are valuable regardless of how quickly AGI or ASI arrives.

5) What are the biggest risks right now?

Unreviewed hallucinations reaching users, data leakage, biased outputs, prompt injection, and uncontrolled third-party tool access.

6) Where should we start experiments?

Choose workflows with high manual effort and low blast radius if errors occur (summaries, drafts, internal search). Measure before/after.

7) How do cloud choices affect AI rollout?

Cloud architecture influences cost, latency, and governance. See Cloud Computing Benefits for Businesses for foundational decisions that make AI safer and cheaper.

Conclusion: AGI vs ASI in Perspective

The AGI vs ASI conversation usefully separates two horizons: human-level generality and beyond-human capability. But progress doesn’t require certainty about either. The right move is to operationalize what works now—copilots, retrieval-grounded analytics, and guarded automation—while strengthening governance and documentation so you’re resilient to capability shifts. With smart guardrails, disciplined measurement, and a living policy, you capture near-term ROI and stay ready for whatever arrives next.

Further Reading & References

- NIST AI Risk Management Framework (AI RMF 1.0)

- European Union AI Act – Official Portal

- Stanford AI Index Report

Related reading on NestOfWisdom:

- AI Copilots at Work

- MIT Generative AI ROI Report

- Cybersecurity in the Age of AI

- Best AI Productivity Tools (2025)

- Cloud Computing Benefits for Businesses

- Green Tech in the Cloud

Nest of Wisdom Insights is a dedicated editorial team focused on sharing timeless wisdom, natural healing remedies, spiritual practices, and practical life strategies. Our mission is to empower readers with trustworthy, well-researched guidance rooted in both Tamil culture and modern science.

Our aim is for knowledge about natural living and spirituality to benefit everyone.

- Nest of Wisdom Author: https://nestofwisdom.com/author/varakulangmail-com/
